A web application has to be deployed to a web server to make it accessible to users.

The deployment process differs from one application to another. Each application uses its own technologies and versions, along with a distinct set of libraries, and each needs its own runtime configuration.


The deployment server must have the right set of software installed to support the application. Even though cloud providers like Azure support almost every platform in the market, you might still run into a situation that makes you think, “That works fine on my computer, so what’s the problem with this server?”

The container approach solves this problem by providing an isolated and more predictable platform. Docker made this approach popular, and today many developers and companies use it.

This article introduces Docker and shows how to run an Angular application in a Docker container and then deploy it to Azure.

Environment Setup for Angular, Azure and Docker

Here are the prerequisites for this article:

- Node.js – Download and install it from the official site

- Angular CLI – It has to be installed as a global npm package:

> npm install -g @angular/cli

- Docker – Download and install Docker from the official site, or install Docker Toolbox if your platform doesn’t support Docker natively

This article doesn’t show how to build an Angular application from scratch. Instead, it deploys to Azure the Pokémon Explorer sample built in the article “Server Side Rendering using Angular Universal and Node.js to create a Pokémon Explorer App”.

To follow along, download the sample and run it once on your system.

Getting Familiar with Docker

Docker is an open source platform that provides an environment to develop, test and deploy applications. With Docker, you can develop an application faster and deploy it to an environment similar to the one where it was developed. Because the gap between the development and runtime environments is reduced, the application behaves more predictably.

Images and containers are the most important objects you deal with when working with Docker.

An image is a read-only template that contains everything needed to create and run a container: the files and software the application needs, plus settings such as the command to run. In general, an image extends an existing image and applies its own customizations. For example, an image to run a C program could extend an image that comes with an OS and install the environment required to run the C program.

A container is the running instance of an image.

A container is isolated from other containers and from the host system (the system on which Docker runs). Containers share the kernel of the host’s operating system instead of running their own, so a container is very lightweight, and it doesn’t have access to the software installed on the host.

On top of the shared kernel, a container runs the software packaged in its Docker image. It can store files either in its own writable layer or on the host system, through volumes and bind mounts. A container keeps running as long as its main process runs, or until someone stops it.

Because containers are lightweight, they are easy to spin up or bring down. Creating or deleting a container is much faster than creating or deleting a VM.

Each VM runs a complete, stand-alone operating system, so VMs consume a lot of memory on the host, may involve license costs and take time to reach an up-and-running state. A container, on the other hand, doesn’t install a new OS: it uses the host’s kernel and gets ready within seconds, and its image only needs to contain the software required for the application.

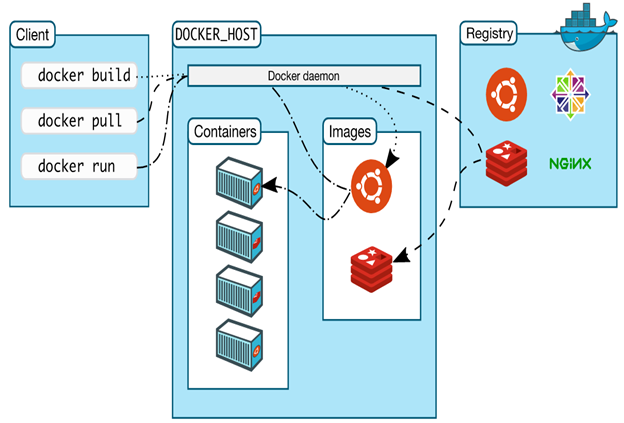

Docker consists of three components: the host, the client and the registry. The following diagram shows how these components work together:

Figure 1 – Docker architecture (source: https://docs.docker.com/engine/docker-overview)

The client is the command-line interface used to interact with Docker. It is used to manage images and containers on the host, and it provides commands to interact with the registry. These commands use the Docker API to talk to the Docker daemon.

The host is the system on which Docker runs. Images and containers are created on the host machine. The host runs the Docker daemon, which listens to Docker API requests and manages containers and images. As the diagram shows, the daemon sits between the client on one side and the containers, images and registry on the other. All requests pass through the daemon, and their results are stored on the host system.

The registry stores Docker images. Docker Hub is the default public registry, and anyone can use the public images available there. Docker can also work with other registries, such as Docker Cloud, Azure Container Registry or your company’s own registry.
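Because Docker Hub is the default registry, a short name such as nginx or hello-world is expanded to a fully qualified reference before Docker pulls it. The following TypeScript sketch approximates that expansion under simplifying assumptions:

- The first path component counts as a registry host only if it contains a dot or a colon or is localhost.
- Official images get the library/ prefix.
- A missing tag becomes latest.

The name normalizeImageRef is hypothetical. Docker’s real parser handles more cases, such as digests.

```typescript
// Simplified sketch of how a short image name is expanded. It ignores
// digests (@sha256:...) and other edge cases of the real reference grammar.
interface ImageRef {
  registry: string;   // e.g. "docker.io" or "raviregistry.azurecr.io"
  repository: string; // e.g. "library/nginx"
  tag: string;        // e.g. "latest"
}

const DEFAULT_REGISTRY = "docker.io";

function normalizeImageRef(ref: string): ImageRef {
  const parts = ref.split("/");
  let registry = DEFAULT_REGISTRY;
  // The first component is a registry host only if it looks like one:
  // it contains "." or ":" (a port), or it is "localhost".
  if (parts.length > 1 && (/[.:]/.test(parts[0]) || parts[0] === "localhost")) {
    registry = parts.shift() as string;
  }
  let repository = parts.join("/");
  // The tag is whatever follows ":" in the last path segment.
  let tag = "latest";
  const lastSegment = repository.slice(repository.lastIndexOf("/") + 1);
  const colon = lastSegment.lastIndexOf(":");
  if (colon !== -1) {
    tag = lastSegment.slice(colon + 1);
    repository = repository.slice(0, repository.length - lastSegment.length + colon);
  }
  // Official images on Docker Hub live in the "library" namespace.
  if (registry === DEFAULT_REGISTRY && repository.indexOf("/") === -1) {
    repository = "library/" + repository;
  }
  return { registry, repository, tag };
}
```

With this sketch, nginx becomes docker.io/library/nginx:latest and node:8.9-alpine becomes docker.io/library/node:8.9-alpine. A name such as raviregistry.azurecr.io/sravikiran/pokemon-app-ssr keeps its own registry host.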

Getting your hands dirty with Docker

If you have installed Docker, it should automatically start running in the background. Check the system tray; if it isn’t running yet, start it from the Start menu.

Open a command prompt and run the following command to check that Docker is installed:

> docker -v

This command displays the version of the installed Docker client, and it works even if the Docker daemon isn’t running. The best way to check that the installation works correctly is to run a container. Docker provides a Hello World image for this purpose. To get this image and run it, run the following command:

> docker run hello-world

The output produced by this command is similar to Figure 2:

Figure 2 – Docker hello world

The operations performed by Docker are clearly visible in the log printed on the console.

In Figure 2, Docker first looked for the hello-world image on the local machine. Since the image was not present on the local system, it pulled the image from the registry. Then it created a container from the image and ran it. The program in the image printed the Hello World message along with the list of steps Docker took to produce it. If you run the same command again, Docker won’t pull the image from the registry, as it is already available on the system.
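The caching behaviour described above can be modelled in a few lines. This TypeScript sketch is a toy, not Docker’s implementation. The function runImage, the in-memory image list and the pull callback are all hypothetical. The sketch shows why the second docker run of hello-world skips the download.

```typescript
// Toy model of the image lookup that "docker run" performs. The image list
// and the pull callback are hypothetical stand-ins, not Docker APIs.
type PullFn = (image: string) => void;

function runImage(localImages: string[], image: string, pull: PullFn): string[] {
  const steps: string[] = [];
  if (localImages.indexOf(image) === -1) {
    // Not in the local cache: fetch it from the registry first.
    steps.push(`image ${image} not found locally, pulling`);
    pull(image);
    localImages.push(image); // later runs will find it locally
  }
  steps.push(`creating and starting a container from ${image}`);
  return steps;
}
```

Calling runImage twice with the same list triggers the pull callback only on the first call. The second call goes straight to creating the container.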

To see the list of images on the system, run the following command:

> docker images

Running an image creates a container. The containers currently running on the system can be listed using the following command:

> docker ps

This command may not produce any output if there are no active containers. All containers on the system, including stopped ones, can be listed using the following command:

> docker ps -a

The hello-world container stops as soon as it prints its messages to the console. Let’s look at an image that keeps running until we stop it. Pull the nginx image:

> docker pull nginx

The docker run command needs a few parameters to run this container. The following command shows these parameters:

> docker run -p 8080:80 -d nginx

The following listing describes the options used in the above command:

- The -p option publishes a container port on the host. nginx serves a web application on port 80 inside the container, and that port has to be mapped to a port on the host. Here, 8080 is the port on the host through which the application running in the container is made available.

- The -d option runs the container in detached mode. The container runs in the background, and the command prints the container ID and returns control of the terminal. Like any container, it exits when its main process exits.
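To make the direction of the -p mapping explicit, here is a small TypeScript sketch that parses the hostPort:containerPort form used above. The parsePortMapping function and PortMapping type are hypothetical. Only this basic form is handled; Docker also accepts a host IP prefix and port ranges.

```typescript
// Hypothetical helper that parses the "hostPort:containerPort" value passed to -p.
// Only this basic form (optionally followed by /tcp or /udp) is handled.
interface PortMapping {
  hostPort: number;      // port opened on the host, e.g. 8080
  containerPort: number; // port the app listens on inside the container, e.g. 80
  protocol: "tcp" | "udp";
}

function parsePortMapping(spec: string): PortMapping {
  const match = /^(\d+):(\d+)(?:\/(tcp|udp))?$/.exec(spec);
  if (match === null) {
    throw new Error(`unsupported port spec: ${spec}`);
  }
  const hostPort = parseInt(match[1], 10);
  const containerPort = parseInt(match[2], 10);
  [hostPort, containerPort].forEach((port) => {
    if (port < 1 || port > 65535) {
      throw new Error(`port out of range: ${port}`);
    }
  });
  // Ports are published as TCP unless a protocol is given.
  const protocol = match[3] === "udp" ? "udp" : "tcp";
  return { hostPort, containerPort, protocol };
}
```

parsePortMapping("8080:80") returns { hostPort: 8080, containerPort: 80, protocol: "tcp" }. In other words, requests to port 8080 on the host reach port 80 inside the container.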

Once this command runs, you can open a browser and change the URL to http://localhost:8080. You will see an output similar to the following screenshot:

Figure 3 – nginx output

Now the docker ps command will list the nginx container, similar to the following figure:

Figure 4 – Docker ps

The container can be stopped by using the name or the first few characters of the container ID. The following commands show this:

> docker stop 2b3e

Or

> docker stop zealous_curran
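Docker accepts either a container name or a unique prefix of the container ID. The sketch below illustrates that lookup in TypeScript. The findContainer function and ContainerInfo type are hypothetical, and the real lookup happens inside the Docker daemon. A prefix that matches more than one container is rejected, so use as many characters as needed to make it unique.

```typescript
// Sketch of identifying a container by name or by an ID prefix, as in
// "docker stop 2b3e" or "docker stop zealous_curran". Hypothetical data model.
interface ContainerInfo {
  id: string;      // full container ID (hex)
  names: string[]; // names such as "zealous_curran"
}

function findContainer(containers: ContainerInfo[], query: string): ContainerInfo {
  if (query === "") {
    throw new Error("empty container reference");
  }
  // This sketch checks exact names first.
  const byName = containers.filter((c) => c.names.indexOf(query) !== -1);
  if (byName.length === 1) {
    return byName[0];
  }
  // Otherwise the query is treated as an ID prefix, which must be unique.
  const byPrefix = containers.filter((c) => c.id.slice(0, query.length) === query);
  if (byPrefix.length > 1) {
    throw new Error(`ambiguous ID prefix: ${query}`);
  }
  if (byPrefix.length === 0) {
    throw new Error(`no such container: ${query}`);
  }
  return byPrefix[0];
}
```

For example, suppose two containers have IDs starting with 2b3e and 2b77. The prefix 2b3e identifies exactly one of them, while 2b is ambiguous.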

Running Angular Application in Docker Container

Now let’s deploy the Pokémon Explorer application in a Docker container. To do so, a custom image has to be created. As the Angular application needs Node.js to be built and served, the image has to be based on the Node.js image.

As mentioned earlier, this article uses the Pokémon Explorer sample for the demonstration. Download it and set it up before proceeding. This sample was written for an article on server-side rendering of Angular applications, so it can run both as a browser-rendered application and as a server-rendered application.

To run this sample, open a command prompt and navigate to the folder containing the code and run the following commands:

> npm install

> ng serve

After running the above commands, open a browser and navigate to http://localhost:4200. You will see the page rendered. To render it on the server instead, run the following commands:

> npm run build:ssr

> npm run serve:ssr

Open the URL http://localhost:4201 in a browser and you will see the same page rendered on the server.

We will build images to deploy the application both ways in a Docker container.

Deploying Browser Rendered Angular App

First, let’s build an image that runs the Angular application in the browser. This image will be based on the Node.js image, so Node.js and npm come installed from the base image. On top of that, it needs instructions to copy the code, build it and run it.

The custom image that hosts the source code can be created using a Dockerfile. A Dockerfile is a text document that contains a list of instructions Docker executes to build the image. These instructions derive the image from an existing image. They then perform tasks such as installing any additional software, copying the code and setting up the environment for the application. They also specify the command that runs the application when a container starts.

This file will be passed as an input to the docker build command to create the image.

Following are the tasks to be performed in the Dockerfile for the custom image:

- Extend from the node:8.9-alpine image

- Copy code of the sample into the image

- Install npm packages

- Build the code using ng build command

- Set the port number to 80 and expose this port for the host system to communicate with the container

- Install http-server package

- Start a server using http-server in the dist/browser folder

Add a new file to the root of the application and name it Dockerfile. Add the following code to this file:

FROM node:8.9-alpine as node-angular-cli

LABEL authors="Ravi Kiran"

# Building Angular app

WORKDIR /app

COPY package.json /app

RUN npm install

COPY . /app

# Creating bundle

RUN npm run build -- --prod

WORKDIR /app/dist/browser

EXPOSE 80

ENV PORT 80

RUN npm install http-server -g

CMD [ "http-server" ]

I used http-server in the above example to keep the demo simple. If you prefer using a web framework like Express, you can add a Node.js server file and serve the application using Express.
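The ENV PORT 80 line in the Dockerfile matters here. http-server reads the PORT environment variable when no port is given on its command line, and a custom Node.js server (for example, one built with Express) has to do the same. A minimal TypeScript sketch of that logic follows. The resolvePort function and its fallback value are hypothetical choices, not part of the sample.

```typescript
// Sketch of how a custom Node.js server could choose its port. The Dockerfile
// sets ENV PORT 80, so inside the container PORT is "80". The fallback used
// outside Docker is the caller's (hypothetical) choice.
function resolvePort(env: Record<string, string | undefined>, fallback: number): number {
  const raw = env["PORT"];
  if (raw === undefined || raw === "") {
    return fallback;
  }
  if (!/^\d+$/.test(raw)) {
    throw new Error(`PORT must be a number, got "${raw}"`);
  }
  const port = parseInt(raw, 10);
  if (port < 1 || port > 65535) {
    throw new Error(`PORT out of range: ${port}`);
  }
  return port;
}
```

In a server file you would call resolvePort(process.env, 8080) and pass the result to the server’s listen method. The same code then listens on port 80 in the container and on the fallback port elsewhere.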

Note: The Dockerfile doesn’t have a command to install Angular CLI globally. This is because the Angular CLI package installed locally in the project can be invoked through npm scripts. The command that creates the application bundle runs the build script defined in package.json, and the -- separator passes the --prod flag on to that script.

Now that the Dockerfile is ready, it can be used to create an image. Before doing that, Docker has to be made aware of the files that don’t need to be copied into the image. This can be done using the .dockerignore file.

The .dockerignore file is similar to .gitignore file. It has to contain a list of files and folders to be ignored while copying the application’s code into the image.

Add a file to the root of the project and name it .dockerignore. Add the following statements to this file:

node_modules

npm-debug.log

Dockerfile*

docker-compose*

.dockerignore

.git

.gitignore

README.md

LICENSE

.vscode

dist/node_modules

dist/npm-debug.log
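Although the format resembles .gitignore, the matching rules differ in one important way. .dockerignore patterns are matched relative to the root of the build context, so node_modules excludes only the top-level folder; a pattern such as **/node_modules matches at any depth. The following TypeScript sketch approximates the matching for the patterns above. It supports only the * and ? wildcards and ignores ** and ! exceptions. The names isIgnored and toRegExp are hypothetical.

```typescript
// Simplified sketch of .dockerignore matching. Assumptions: only "*" and "?"
// wildcards ("**" and "!" exceptions are not supported); paths use "/" and
// are relative to the build context root.
function toRegExp(pattern: string): RegExp {
  const body = pattern
    .replace(/^\/+|\/+$/g, "") // "/foo" and "foo/" behave like "foo"
    .split("")
    .map((ch) => {
      if (ch === "*") return "[^/]*"; // any run of characters within one segment
      if (ch === "?") return "[^/]";  // exactly one character within a segment
      return ch.replace(/[.+^${}()|[\]\\]/g, "\\$&"); // escape regex metacharacters
    })
    .join("");
  // Patterns are anchored at the root of the build context.
  return new RegExp("^" + body + "$");
}

function isIgnored(filePath: string, patterns: string[]): boolean {
  const regexes = patterns.map(toRegExp);
  const segments = filePath.split("/");
  // A path is excluded if it, or any of its parent directories, matches.
  for (let i = 1; i <= segments.length; i++) {
    const candidate = segments.slice(0, i).join("/");
    if (regexes.some((re) => re.test(candidate))) {
      return true;
    }
  }
  return false;
}
```

With the list above, the sketch excludes node_modules/@angular/core/package.json and Dockerfile-ssr. It still sends src/app/app.component.ts and package.json to the Docker daemon.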

To create an image for this application, run the following command:

> docker build -f .\Dockerfile -t sravikiran/node-angular-cli .

The above command provides two inputs to docker build: the name of the Dockerfile, passed with the -f option, and the name of the image, passed with the -t option. You can change both names. The period at the end of the command is the build context, which is the current folder. Docker logs the progress of each instruction to the console, producing output similar to the following:

Figure 5 – Docker build

This command will take a couple of minutes, as it has to install the npm packages of the project and then the global http-server module. The last instruction, CMD, specifies the command to execute in the container. This command doesn’t run during the build; it runs only when a container is started from the image.

Once the command finishes execution, you can find the image in the list of images on the system. You will find an entry as shown in the following screenshot:

Figure 6 – Docker image

To create the container using this image, run the following command:

> docker run -d -p 8080:80 sravikiran/node-angular-cli

This command is similar to the command used to run the nginx container earlier. It runs the application inside the container and makes it accessible through port 8080 of the host system, which is mapped to port 80 in the container. Open the URL http://localhost:8080 in a browser to see the application.

Though it works, the image of this application takes 349 MB on the host system. This is because all of the source code and npm packages of the application still live in the image. As the application is served from the dist folder, the source files and npm packages are not needed at runtime.

These files can be left out of the image using a multi-stage build.

A Dockerfile can define multiple build stages, each starting with its own FROM instruction. By default, the image Docker produces is the one built by the last stage, and that stage can copy files from the earlier stages.

The Dockerfile created earlier can be divided into two stages. The first stage copies the code and generates the dist folder. The second stage copies the dist/browser folder from the first stage and starts http-server.
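To see how stages relate, the following TypeScript toy parser lists the stages of a Dockerfile. It also records which earlier stage each COPY --from instruction reads from. It is only a sketch: it assumes one instruction per line, with no line continuations and no FROM flags. The name parseStages is hypothetical. By default, the image Docker produces comes from the last stage in the list.

```typescript
// Toy parser (one instruction per line, no line continuations, no
// "FROM --platform" flag) that lists the stages of a multi-stage Dockerfile.
interface Stage {
  baseImage: string;    // image after FROM
  name?: string;        // alias from "FROM <image> as <name>"
  copiesFrom: string[]; // values of COPY --from=... in this stage
}

function parseStages(dockerfile: string): Stage[] {
  const stages: Stage[] = [];
  dockerfile.split("\n").forEach((rawLine) => {
    const line = rawLine.trim();
    // Instructions are case-insensitive, so "as" and "AS" both work.
    const from = /^FROM\s+(\S+)(?:\s+AS\s+(\S+))?$/i.exec(line);
    if (from !== null) {
      stages.push({ baseImage: from[1], name: from[2], copiesFrom: [] });
      return;
    }
    const copy = /^COPY\s+--from=(\S+)/i.exec(line);
    if (copy !== null) {
      if (stages.length === 0) {
        throw new Error("COPY --from before the first FROM");
      }
      stages[stages.length - 1].copiesFrom.push(copy[1]);
    }
  });
  return stages;
}
```

For the two-stage Dockerfile shown next, the parser finds two stages. The first is the named node-angular-cli stage. The second is an unnamed final stage that copies from it, and only what that stage copies ends up in the final image.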

The following snippet shows the modified Dockerfile with two stages:

# First stage, named node-angular-cli: installs packages and builds the app

FROM node:8.9-alpine as node-angular-cli

LABEL authors="Ravi Kiran"

# Building Angular app

WORKDIR /app

COPY package.json /app

RUN npm install

COPY . /app

RUN npm run build -- --prod

# Final stage: this image is used to create the container

FROM node:8.9-alpine

WORKDIR /app

# Copying dist folder from node-angular-cli image

COPY --from=node-angular-cli /app/dist/browser .

EXPOSE 80

ENV PORT 80

RUN npm install http-server -g

CMD [ "http-server" ]

The comments in the above snippet explain the modified parts of the file. Run the following command to create the image:

> docker build --rm -f .\Dockerfile -t sravikiran/pokemon-app .

This command adds the --rm option to the options and inputs used in the previous docker build command. This option removes the intermediate containers that Docker creates while building; Docker already does this by default after a successful build. Layers from the first stage may still remain on the host as untagged images, which can be removed with docker image prune. Check the size of the new image: it should be around 60 MB, which is significantly smaller than the previous image.

Deploying Server Rendered Angular App

The Dockerfile for the server-rendered Angular application has the following differences:

- It will use the build:ssr script to create bundles for the browser and the server

- The compiled server.js file will be used to start the Node.js server

The following snippet shows this Dockerfile:

FROM node:8.9-alpine as node-angular-cli

LABEL authors="Ravi Kiran"

# Building Angular app

WORKDIR /app

COPY package.json /app

RUN npm install

COPY . /app

RUN npm run build:ssr

FROM node:8.9-alpine

WORKDIR /app

COPY --from=node-angular-cli /app/dist ./dist

EXPOSE 80

ENV PORT 80

CMD [ "node", "dist/server.js" ]

Save this Dockerfile in a file named Dockerfile-ssr. Run the following command to build the image:

> docker build --rm -f .\Dockerfile-ssr -t sravikiran/pokemon-app-ssr .

Once the image is ready, run the following command to run the container:

> docker run -d -p 8080:80 sravikiran/pokemon-app-ssr

If you want to see both the browser-rendered and the server-rendered applications running at the same time, map one of the containers to a different host port of your choice, for example -p 8081:80.
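When both containers run side by side, each needs its own host port. Both can keep port 80 inside the container, because every container gets its own network stack by default. The hypothetical TypeScript check below reports host ports that appear more than once in a list of -p values. It is a sketch for illustration, not a Docker feature.

```typescript
// Hypothetical check for containers that will run side by side. Each -p value
// is "hostPort:containerPort". Host ports must be unique on the host, while
// container ports may repeat across containers.
function findHostPortConflicts(specs: string[]): number[] {
  const seen: { [port: number]: boolean } = {};
  const conflicts: number[] = [];
  specs.forEach((spec) => {
    const parts = spec.split(":");
    if (parts.length !== 2 || !/^\d+$/.test(parts[0])) {
      throw new Error(`expected hostPort:containerPort, got ${spec}`);
    }
    const hostPort = parseInt(parts[0], 10);
    // Report each conflicting host port only once.
    if (seen[hostPort] && conflicts.indexOf(hostPort) === -1) {
      conflicts.push(hostPort);
    }
    seen[hostPort] = true;
  });
  return conflicts;
}
```

For example, 8080:80 and 8081:80 produce no conflict, whereas using 8080:80 for both containers reports 8080. In that case Docker would fail to start the second container because the host port is already allocated.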

Deploying to Azure

Microsoft Azure supports Docker very well. Azure Container Registry is similar to the Docker Hub registry: you can push images to it and use them for deployment. Azure also supports container orchestrators such as Docker Swarm, Kubernetes and DC/OS. This tutorial uses Azure Container Registry to store the images and Web App for Containers to deploy them.

To create a Docker registry, log in to the Azure portal, choose New > Azure Container Registry and click the Create button in the dialog that appears. Provide inputs in the Create container registry form and click Create.

Figure 7 – Create container registry

The first field is the name of the registry you are creating, and it has to be unique. Azure takes a couple of minutes to create the registry. Once the registry is created, the Azure portal shows an alert; click the link in the alert to see the resource. On the registry resource page, go to the Access Keys tab and enable the Admin user option.
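The registry name also determines the login server used by docker login, docker tag and docker push. Assume Azure’s documented naming rules: 5 to 50 alphanumeric characters. Under that assumption, the following TypeScript sketch validates a name and builds the lowercase login server, <name>.azurecr.io. The acrLoginServer helper is hypothetical. Global uniqueness can only be checked by Azure when the registry is created.

```typescript
// Sketch assuming Azure Container Registry naming rules: 5-50 alphanumeric
// characters. Only the format is validated; uniqueness is checked by Azure.
function acrLoginServer(registryName: string): string {
  if (!/^[A-Za-z0-9]{5,50}$/.test(registryName)) {
    throw new Error(`invalid registry name: ${registryName}`);
  }
  // The login server is the registry name in lowercase plus ".azurecr.io".
  return registryName.toLowerCase() + ".azurecr.io";
}
```

acrLoginServer("raviregistry") returns raviregistry.azurecr.io, the login server used in the commands that follow.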

Figure 8 – Registry Access Keys

You will see the passwords of your access keys after enabling the Admin user option. These passwords are used to log in to the registry. To push images to the new registry, the Docker client needs access to it. Run the following command to log in to the registry:

> docker login <your-registry>.azurecr.io

Running the command prompts for credentials. The username is the name of the registry, and either of the passwords from the Access Keys tab can be used as the password. Figure 9 shows this:

Figure 9 – Logging into registry

After logging in, create a tag for the image to be pushed. The following commands tag the pokemon-app-ssr image and then push it to Azure (replace raviregistry with the name of your registry):

> docker tag sravikiran/pokemon-app-ssr:latest raviregistry.azurecr.io/sravikiran/pokemon-app-ssr

> docker push raviregistry.azurecr.io/sravikiran/pokemon-app-ssr
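The docker tag command gives the local image a second name that starts with the registry’s login server, which tells docker push where to upload it. The following TypeScript sketch builds such a name. The registryTarget helper is hypothetical and assumes the local name doesn’t already include a registry host.

```typescript
// Hypothetical helper mirroring the docker tag step: it prefixes a local image
// name with a registry login server. A missing tag defaults to "latest".
function registryTarget(localImage: string, loginServer: string): string {
  const slash = localImage.lastIndexOf("/");
  const colon = localImage.lastIndexOf(":");
  // A ":" after the last "/" separates the tag from the repository.
  const hasTag = colon > slash;
  const repository = hasTag ? localImage.slice(0, colon) : localImage;
  const tag = hasTag ? localImage.slice(colon + 1) : "latest";
  return `${loginServer}/${repository}:${tag}`;
}
```

For sravikiran/pokemon-app-ssr:latest and raviregistry.azurecr.io, it returns raviregistry.azurecr.io/sravikiran/pokemon-app-ssr:latest. The command above omits :latest from the target name and Docker fills in latest, so both refer to the same image.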

The push command will take a couple of minutes as it has to copy the whole image to the container registry. Once the image is pushed, it can be seen under the repositories tab of the registry, as shown in Figure 10:

Figure 10 – Repositories tab of registry

A web application can be deployed using this image. To do so, choose New > Web App for Containers and click the Create button. Provide inputs for the web app. Then choose Configure container and provide details of the registry, image and tag as shown in Figure 11. After filling in the details, click OK and then the Create button to create the web application.

Figure 11 – Inputs for web app

Wait for the deployment to complete and then visit the URL of your site. In my case, the URL is http://explorepokemons.azurewebsites.net. Once this page opens in the browser, you will see the server-side rendered site.

Figure 12 – Pokémon explorer site hosted on Azure

The same process can be followed to deploy the browser rendered version of the application.

Conclusion

Docker is an excellent platform to deploy applications, and Azure has excellent support for Docker. As this tutorial demonstrated, it is easy to take a Docker image built from an application (in our case, an Angular app), push it to an Azure container registry and get it running. This combination gives developers more control over the environment, and the setup is straightforward.

Let’s use this toolset to build and deploy great applications!

This article was technically reviewed by Subodh Sohoni.

This article has been editorially reviewed by Suprotim Agarwal.


Rabi Kiran (a.k.a. Ravi Kiran) is a developer working on Microsoft technologies in Hyderabad. These days, he is spending his time on JavaScript frameworks like AngularJS, the latest updates to JavaScript in ES6 and ES7, Web Components and Node.js, and also on several Microsoft technologies including ASP.NET 5, SignalR and C#. He is an active blogger and an author at SitePoint and DotNetCurry. He has received the Microsoft MVP (Visual Studio and Dev Tools) and DZone MVB awards for his contribution to the community.