Editorial Note: ASP.NET Core (previously known as ASP.NET vNext or ASP.NET 5) is a re-implementation of ASP.NET, designed to be highly competitive with the popular web frameworks from Node and Go. It unifies previous web technologies like MVC, Web API and Web Pages into a single programming framework, which was previously also referred to as MVC 6. ASP.NET Core is based on .NET Core, and you can use it to develop and deploy applications on Windows, Linux and Mac OS X.

ASP.NET Core 1.1, the latest version at the time of writing, was released in November 2016. To try it, you first need to download and install the .NET Core 1.1 SDK, which can be installed on a machine that already has .NET Core 1.0. .NET Core 1.1 apps can be developed with Visual Studio 2015, Visual Studio 2017 RC, Visual Studio Code and Visual Studio for Mac.

Once the installation is complete, you can start creating new projects from the command line with dotnet new. The Visual Studio tooling will still create 1.0 projects (unless you install the new alpha version of the tooling, which includes csproj support), so you will need to migrate those projects to 1.1.

The migration from ASP.NET Core 1.0 to 1.1 is not too complicated, as described in the official announcement. You basically need to update your project.json file to target version 1.1 of the framework and of the NuGet packages. If you are using VS 2015 and run into issues because multiple SDK versions are installed side by side, you might also need to create or update a global.json file that pins the SDK version used by your project.

The following sections will take a look at most of the changes and features that have been introduced in the new ASP.NET Core 1.1 version.

New features in ASP.Net Core 1.1

Middleware as filters

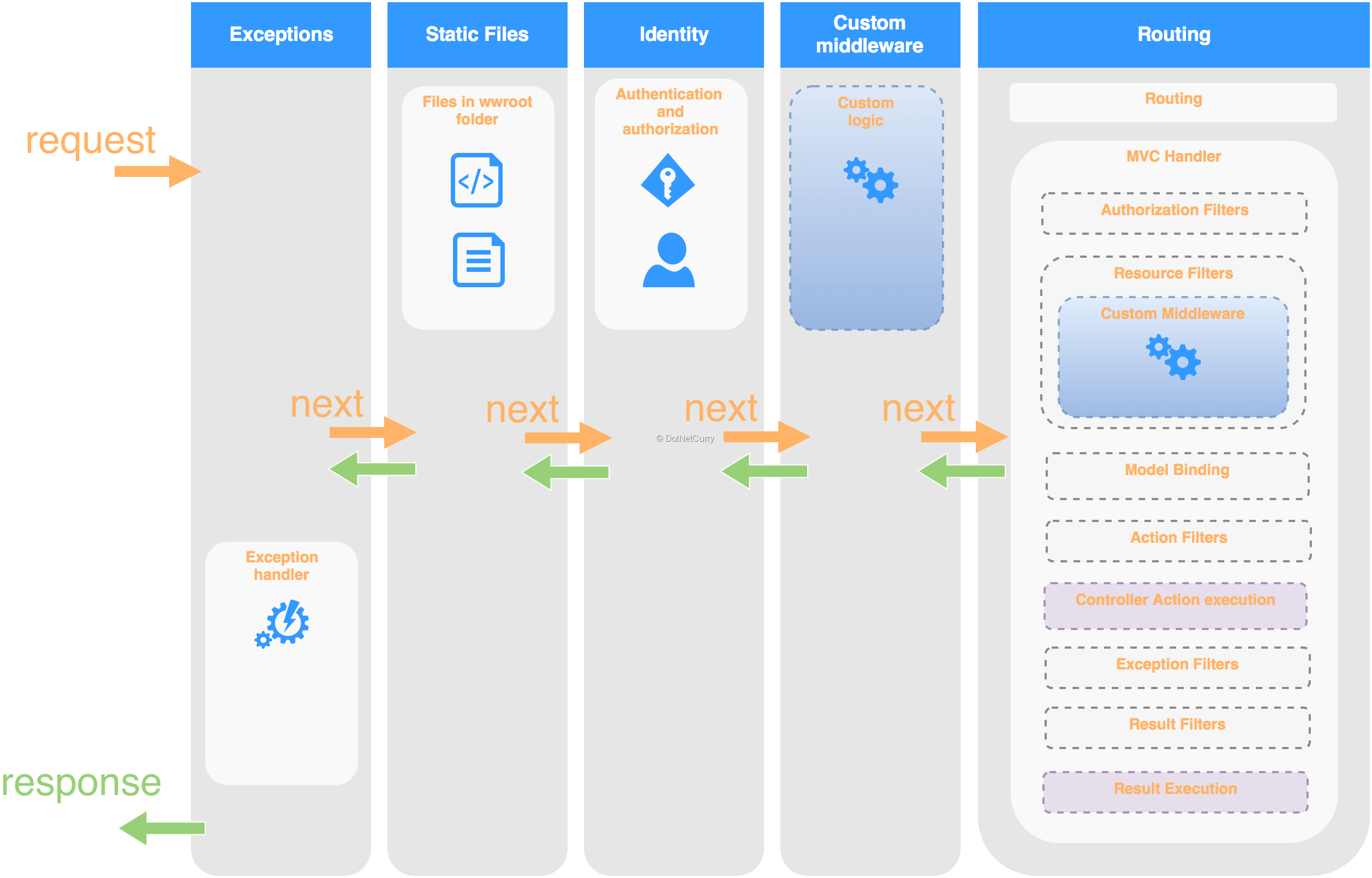

Prior to this feature, you could add your own middleware to the request pipeline, which was great but had some limitations:

- It would run for all requests

- You could only place it either before or after Routing/MVC, as MVC has its own specific pipeline triggered by the routing middleware

Figure 1, a typical request pipeline

Being able to add middleware as a filter is a great new feature that gives you additional flexibility in the way the request pipeline is configured, allowing interesting new scenarios.

You can now decide whether to add your middleware as part of the regular middleware pipeline or as part of the MVC-specific pipeline:

Figure 2, a typical pipeline including Middleware as Filter

How does it work?

If you check the source code, you will see that a middleware added as a filter is effectively added as a Resource Filter. This means the middleware executes after routing and the authorization filters, but before the rest of the MVC pipeline (model binding, action filters and the action itself). You can now:

- Add middleware (your own or 3rd party provided) to particular controllers or actions. For example you could add the new compression middleware only to particular actions.

- Use Route features like the RouteData values extracted by the routing middleware.

The first option means greater flexibility. The second might not seem like much, but it makes a big difference in some scenarios.

For example, I wrote an article about Internationalization and Globalization in ASP.NET Core which included a request culture provider that extracted the culture from the url. The route was defined using the pattern /{culture}/{controller}/{action}, so when a url like /es/Foo/Bar arrived, it would extract the value of the culture route segment (“es”) and then use the default Spanish culture in that request.

I had to create a custom UrlRequestCultureProvider which parsed the url and extracted the value of the culture route segment, just because the RequestLocalization middleware had to be added before the Routing/MVC middleware, at a point where no route data is available yet.

Now that middleware can be added as filters, it would be possible to add RequestLocalization as a filter and the UrlRequestCultureProvider would only need to get the value of the culture from the RouteData that has already been parsed by the Routing middleware. That is exactly what Microsoft has done when creating its own RouteDataRequestCultureProvider.
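To make the difference concrete, the following framework-free sketch shows what reading the culture from already-parsed route values amounts to, compared with parsing the url by hand. The CultureFromRouteValues function and the plain dictionary standing in for RouteData.Values are hypothetical; the built-in RouteDataRequestCultureProvider reads the culture and ui-culture route values by default, and the localization middleware then checks the result against the supported cultures.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper illustrating the idea behind RouteDataRequestCultureProvider:
// routing has already split "/es/Foo/Bar" into named values, so no URL parsing is needed here.
(string Culture, string UICulture)? CultureFromRouteValues(IDictionary<string, object> routeValues)
{
    routeValues.TryGetValue("culture", out var cultureValue);
    routeValues.TryGetValue("ui-culture", out var uiCultureValue);

    var culture = cultureValue as string;
    var uiCulture = uiCultureValue as string;

    // No culture information in the route: let the next provider decide
    if (culture == null && uiCulture == null)
    {
        return null;
    }

    // If only one of the values is present, use it for both
    return (culture ?? uiCulture, uiCulture ?? culture);
}

// Route values produced for /es/Foo/Bar by the pattern {culture}/{controller}/{action}
var values = new Dictionary<string, object>
{
    ["culture"] = "es",
    ["controller"] = "Foo",
    ["action"] = "Bar"
};

Console.WriteLine(CultureFromRouteValues(values)); // (es, es)
```

Using a single value for both cultures when only one is present is, as far as I know, also what the built-in provider does; the sketch does not cover validation against the supported cultures.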

How to use it?

Adding middleware as a filter requires 2 steps:

- Creating a Pipeline class. This is a class implementing a single method public void Configure(IApplicationBuilder applicationBuilder) which effectively defines its own mini application pipeline. It’s like a reduced version of Startup.Configure that only adds the middleware that would be run by that filter.

- Adding the filter (locally to some controller/action or globally)
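Conceptually, the Configure method of a pipeline class builds a small middleware chain, the same way Startup.Configure builds the main one. The sketch below models that composition with plain delegates so it runs without ASP.NET Core: each component receives the next delegate and returns a new one, which is the shape of IApplicationBuilder.Use(Func<RequestDelegate, RequestDelegate>). The component names and the List<string> standing in for the HttpContext are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Each hypothetical component takes the next delegate and returns a new delegate.
// A List<string> plays the role of the HttpContext and records the execution order.
var components = new List<Func<Action<List<string>>, Action<List<string>>>>();

components.Add(next => log =>
{
    log.Add("localization: before");
    next(log);
    log.Add("localization: after");
});

components.Add(next => log =>
{
    log.Add("compression: before");
    next(log);
    log.Add("compression: after");
});

// Compose in reverse so that the first registered component runs first
Action<List<string>> Build(Action<List<string>> terminal) =>
    components.AsEnumerable().Reverse()
        .Aggregate(terminal, (next, component) => component(next));

// The terminal delegate stands in for the rest of the MVC pipeline (the action)
var pipeline = Build(log => log.Add("action"));

var trace = new List<string>();
pipeline(trace);
Console.WriteLine(string.Join(" -> ", trace));
// localization: before -> compression: before -> action -> compression: after -> localization: after
```

The trace shows the usual nesting: components run in registration order on the way in and in reverse order on the way out, with the action in the middle.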

Going back to the Localization article, now you could just add the Localization middleware as a global filter. You also wouldn’t need to create the UrlRequestCultureProvider class, as you can use the out of the box RouteDataRequestCultureProvider.

- Update the ApiPrefixConvention to add the route segment with the key “culture” instead of “language”, as that is the default key expected by the new route data request culture provider.

- Remove the UrlRequestCultureProvider class.

- Move the Localization middleware initialization from Startup.Configure to a new class LocalizationFilterPipeline:

```csharp
using System.Globalization;
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Localization;
using Microsoft.AspNetCore.Localization.Routing;

public class LocalizationFilterPipeline
{
    public void Configure(IApplicationBuilder applicationBuilder)
    {
        var supportedCultures = new[]
        {
            new CultureInfo("en"),
            new CultureInfo("en-US"),
            new CultureInfo("es"),
            new CultureInfo("es-ES")
        };

        var options = new RequestLocalizationOptions
        {
            DefaultRequestCulture = new RequestCulture("en-US"),
            SupportedCultures = supportedCultures,
            SupportedUICultures = supportedCultures
        };

        // Read the culture from the route data already parsed by the routing middleware
        options.RequestCultureProviders.Insert(0,
            new RouteDataRequestCultureProvider() { Options = options });

        applicationBuilder.UseRequestLocalization(options);
    }
}
```

- Update Startup.ConfigureServices to add localization as a global filter:

```csharp
public void ConfigureServices(IServiceCollection services)
{
    // …

    services
        .AddMvc(opts =>
        {
            opts.Conventions.Insert(0, new ApiPrefixConvention());
            opts.Filters.Add(
                new MiddlewareFilterAttribute(typeof(LocalizationFilterPipeline)));
        })
        .AddViewLocalization(LanguageViewLocationExpanderFormat.Suffix)
        .AddDataAnnotationsLocalization();
}
```

If you have any trouble, you can check the updated project on GitHub.

There is one small caveat with the updated solution compared to the original one! Since the Localization middleware now runs after the authorization filters, anything executed earlier in the pipeline will always use the default Culture and UICulture! This might or might not be a problem depending on your application, but if you have middleware that needs to support localization or internationalization, you will need to take it into account. Either move that middleware to the filter pipeline, or revert to the approach outlined in the original article.

If you are interested, there is a nice in-depth explanation about the inner workings of middleware as filters in Andrew Lock’s blog.

Rewrite Module

There is an entirely new URL Rewriting Middleware that can be used to define URL rewrite rules inside your application, instead of defining them externally in the specific hosting solution you are using. This means your rewrite rules will work regardless of your hosting solution, whether you choose Docker, IIS or NGINX+Kestrel. (Although there is no hard rule, depending on your requirements you might be better off with rewrite rules inside the app or in the hosting server.)

Before you can use it, you will need to install the NuGet package Microsoft.AspNetCore.Rewrite. Once installed, you will be able to add the middleware and configure many rewrite rules using out of the box functionality. The following example is directly taken from the announcement page:

```csharp
// In Startup.Configure, where app is the IApplicationBuilder and env the IHostingEnvironment
var options = new RewriteOptions()
    // Redirect using a regular expression
    .AddRedirect("(.*)/$", "$1")
    // Rewrite based on a Regular expression
    .AddRewrite(@"app/(\d+)", "app?id=$1", skipRemainingRules: false)
    // Redirect to a different port and use HTTPS
    .AddRedirectToHttps(302, 5001)
    // Use IIS UrlRewriter rules to configure
    .AddIISUrlRewrite(env.ContentRootFileProvider, "UrlRewrite.xml")
    // Use Apache mod_rewrite rules to configure
    .AddApacheModRewrite(env.ContentRootFileProvider, "Rewrite.txt");

app.UseRewriter(options);
```
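The first two rules rely on .NET regular expressions with $1-style substitutions. The following sketch reproduces only that match-and-substitute step with System.Text.RegularExpressions, to show which paths the example patterns affect and what they become. It assumes the rule sees the request path without its leading slash, and the ApplyRegexRule helper is hypothetical rather than the middleware's own code; whether the result is sent back as a redirect or applied as an internal rewrite depends on using AddRedirect or AddRewrite.

```csharp
using System;
using System.Text.RegularExpressions;

// Hypothetical helper: if the pattern matches, build the new URL from the match
// using $1-style group references; otherwise report no match (null).
string ApplyRegexRule(string path, string pattern, string replacement)
{
    var match = Regex.Match(path, pattern);
    return match.Success ? match.Result(replacement) : null;
}

// AddRedirect("(.*)/$", "$1") removes a trailing slash
Console.WriteLine(ApplyRegexRule("blog/posts/", "(.*)/$", "$1")); // blog/posts

// AddRewrite(@"app/(\d+)", "app?id=$1") turns a path segment into a query string
Console.WriteLine(ApplyRegexRule("app/42", @"app/(\d+)", "app?id=$1")); // app?id=42

// Paths that do not match are left for the next rule
Console.WriteLine(ApplyRegexRule("about", @"app/(\d+)", "app?id=$1") ?? "(no match)"); // (no match)
```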

When you add multiple rewrite rules, they form a “rewrite pipeline” of sorts. Each rule is evaluated in the order it was defined, and each rule can decide whether to end the request, skip the remaining rules or continue with the next rule.
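The evaluation loop can be pictured with this framework-free sketch. The three string constants stand in for the RuleResult values (ContinueRules, SkipRemainingRules and EndResponse), while the RunRules function and the example rules are hypothetical and only model the control flow described above, not the middleware's implementation.

```csharp
using System;
using System.Collections.Generic;

// Stand-ins for the three RuleResult outcomes
const string ContinueRules = "ContinueRules";
const string SkipRemainingRules = "SkipRemainingRules";
const string EndResponse = "EndResponse";

// Hypothetical loop: each rule may change the path and reports what should happen next.
// Returns the final path and whether the request continues down the pipeline.
(string Path, bool Continue) RunRules(string path, List<Func<string, (string, string)>> rules)
{
    foreach (var rule in rules)
    {
        var (newPath, result) = rule(path);
        path = newPath;

        if (result == EndResponse)
        {
            return (path, false); // e.g. a redirect was written: stop the request here
        }
        if (result == SkipRemainingRules)
        {
            break; // the request goes on, but later rules are ignored
        }
        // ContinueRules: evaluate the next rule
    }
    return (path, true);
}

var rules = new List<Func<string, (string, string)>>
{
    // Lower-case the path and carry on
    p => (p.ToLowerInvariant(), ContinueRules),
    // Old URLs are rewritten and no other rule should touch them
    p => p.StartsWith("/old/", StringComparison.Ordinal)
        ? ("/new/" + p.Substring(5), SkipRemainingRules)
        : (p, ContinueRules),
    // Anything under /legacy ends the response (think of a redirect)
    p => p.StartsWith("/legacy", StringComparison.Ordinal) ? (p, EndResponse) : (p, ContinueRules),
};

Console.WriteLine(RunRules("/OLD/Page", rules)); // (/new/page, True)
Console.WriteLine(RunRules("/Legacy/x", rules)); // (/legacy/x, False)
```

Note how "/OLD/Page" never reaches the third rule: the second rule asks to skip the remaining ones.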

Defining custom rewriting rules

When the default rewriting rules are not enough, you can also define your own rules. This basically means implementing the IRule interface.

It defines a single method which takes a RewriteContext: an object wrapping the HttpContext (for the rule to inspect and/or change), a logger, a file provider and a RuleResult that lets you end the request, skip the remaining rules or continue with the next rule.

```csharp
public interface IRule
{
    void ApplyRule(RewriteContext context);
}

public class RewriteContext
{
    public HttpContext HttpContext { get; set; }
    public ILogger Logger { get; set; }
    public RuleResult Result { get; set; }
    public IFileProvider StaticFileProvider { get; set; }
}
```

For example, let me revisit my previous Vanity Urls in ASP.NET Core article and adapt it to use the new rewrite middleware:

- In that article, users could define vanity urls such as /my-vanity-url, which are alternative urls for their profile pages (by default /profile/details/{id}).

- Custom middleware was added to the pipeline, inspecting the request path for vanity urls.

- When a request matched a vanity url, the middleware would overwrite the path in the request object to /profile/details/{id}

- The Routing middleware would run later, which would process the request and use the Details action in the ProfileController.

This can now be implemented using the rewrite middleware and a custom rule. First I will need to implement the new rule for the vanity urls. It will be very similar to the previous VanityUrlsMiddleware, processing the vanity url in the ApplyRule method instead of the Invoke one:

```csharp
using System;
using System.Linq;
using System.Text.RegularExpressions;
using Microsoft.AspNetCore.Identity;
using Microsoft.AspNetCore.Rewrite;
using Microsoft.Extensions.Options;

public class VanityUrlsRewriteRule : IRule
{
    private readonly Regex _vanityUrlRegex =
        new Regex(VanityUrlConstants.VanityUrlRegex);
    private readonly string _resolvedProfileUrlFormat;
    private readonly UserManager<ApplicationUser> _userManager;

    public VanityUrlsRewriteRule(
        UserManager<ApplicationUser> userManager,
        IOptions<VanityUrlsMiddlewareOptions> options)
    {
        _userManager = userManager;
        _resolvedProfileUrlFormat = options.Value.ResolvedProfileUrlFormat;
    }

    public void ApplyRule(RewriteContext context)
    {
        var httpContext = context.HttpContext;

        //get path from request (it can be empty, so don't index into it blindly)
        var path = httpContext.Request.Path.ToUriComponent();
        if (path.StartsWith("/", StringComparison.Ordinal))
        {
            path = path.Substring(1);
        }

        //Check if it matches the VanityUrl format
        //(single segment, only lower case letters, dots and dashes)
        if (!_vanityUrlRegex.IsMatch(path))
        {
            return;
        }

        //Check if a user with this vanity url can be found
        var user = _userManager.Users.SingleOrDefault(u =>
            u.VanityUrl.Equals(path, StringComparison.CurrentCultureIgnoreCase));
        if (user == null)
        {
            return;
        }

        //If we got this far, the url matches a vanity url
        //which can be resolved to the profile details page of the user
        //Replace the request path so the next middleware (MVC) uses the resolved path
        httpContext.Request.Path = String.Format(_resolvedProfileUrlFormat, user.Id);

        //Save the user into the HttpContext
        //for avoiding DB access in the controller action
        httpContext.Items[VanityUrlConstants.ResolvedUserContextItem] = user;

        //No need to set context.Result
        //as the default value "RuleResult.ContinueRules" is ok
    }
}
```

I would then remove the previous middleware from the pipeline and add the new rewrite middleware using my rule:

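```csharp
// VanityUrlsRewriteRule must be registered in Startup.ConfigureServices,
// otherwise GetService returns null
var options = new RewriteOptions().Add(
    app.ApplicationServices.GetService<VanityUrlsRewriteRule>());
app.UseRewriter(options);

app.UseMvc(…);
```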

If you have any trouble with the code, I have updated the article code in its GitHub repo.

Again, there is one caveat compared with the custom middleware! The IRule interface does not support async methods, so if you really need some I/O as part of your rewriting logic, you might be better off with a custom middleware. (Be careful and keep an eye on performance in such a scenario anyway.)

I still need to get more familiar with the rewrite middleware, but right now I would probably use it for rules that don’t involve business logic: the kind of rewrites you would declare in IIS/Apache (like removing trailing slashes, using lower case urls, forcing https, etc.). I would still create my own middleware for rewrites involving business logic, like the vanity urls, if only to retain support for async code. Using the rewrite middleware for them also feels like a bit of an abuse. As I said, I still need to make up my mind about it!

View Components as tag helpers

Prior to ASP.Net Core 1.1, whenever you wanted to invoke a view component from a view, you would write something like:

```cshtml
@await Component.InvokeAsync("LatestArticles", new { howMany = 2 })
```

There is nothing wrong with it, although its syntax is much more verbose than that of tag helpers, for example. It also feels out of place in a Razor view that otherwise contains just HTML elements and tag helpers. For example, take a look at this snippet mixing HTML, partials, tag helpers and view components:

```cshtml
<div class="col-md-8 article-details">
    @Html.Partial("_ArticleSummary", Model)
    <hr />
    <markdown source="@Model.Contents"></markdown>
</div>
<div class="col-md-4">
    <div class="container-fluid sidebar">
        …
        <div class="row">
            @await Component.InvokeAsync("LatestArticles", new { howMany = 2 })
        </div>
    </div>
</div>
```

You can now invoke the view component as a tag helper (notice that the component and parameter names are written in lower-case kebab case!):

```cshtml
<vc:latest-articles how-many="2"></vc:latest-articles>
```
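The tag name comes from the view component name and the attribute names from the parameters of its Invoke/InvokeAsync method, converted to lower-case kebab case. The helper below is a simplified, hypothetical approximation of that conversion (a dash before each upper-case letter), handy for working out which tag to type; the framework's own conversion may treat edge cases such as acronyms or digits differently.

```csharp
using System;
using System.Text;

// Hypothetical approximation of the naming rule: insert a dash before each
// upper-case letter (except the first character) and lower-case everything.
string ToKebabCase(string name)
{
    var sb = new StringBuilder();
    for (var i = 0; i < name.Length; i++)
    {
        var c = name[i];
        if (char.IsUpper(c) && i > 0)
        {
            sb.Append('-');
        }
        sb.Append(char.ToLowerInvariant(c));
    }
    return sb.ToString();
}

// "LatestArticles" component with a "howMany" parameter
var tag = ToKebabCase("LatestArticles");
Console.WriteLine($"<vc:{tag} {ToKebabCase("howMany")}=\"2\"></vc:{tag}>");
// <vc:latest-articles how-many="2"></vc:latest-articles>
```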

This might not seem like a huge change, just a bit of syntactic sugar in the framework. However, I think it is great because:

- It’s a simpler syntax

- Tag helpers and view components are used in the same way. They are both close to HTML elements, which helps you stay in HTML editing mode with less mixing of C# code and HTML.

- You get IntelliSense support, whereas earlier you were on your own using “magic strings”. (Although this requires you to manually install the latest alpha version of the VS tooling, as the current 1.0 tooling won’t provide this IntelliSense support. Hopefully it will officially arrive in VS2015/2017 soon.)

- Once the tooling catches up, it should be able to detect errors in view component names or parameter names/types.

So the same snippet above that mixed partials, tag helpers and view components would now look like this:

```cshtml
<div class="col-md-8 article-details">
    @Html.Partial("_ArticleSummary", Model)
    <hr />
    <markdown source="@Model.Contents"></markdown>
</div>
<div class="col-md-4">
    <div class="container-fluid sidebar">
        …
        <div class="row">
            <vc:latest-articles how-many="2"></vc:latest-articles>
        </div>
    </div>
</div>
```

I have updated the sample project from the Clean Frontend Code article to use view components as tag helpers. You can check the updated code on GitHub.

View Compilation

You might have already used this technique in previous ASP.NET MVC projects. You basically compile all your Razor views as part of your build and deployment process, instead of compiling them on demand when each view is first rendered.

This not only speeds up your initial requests, but also catches some errors in your views at build time instead of at runtime.

The announcement explains how to wire the view pre-compilation as a postpublish script. This means the views will be precompiled as part of publishing the app.

- Add a reference to the NuGet package Microsoft.AspNetCore.Mvc.Razor.ViewCompilation.Design

- Add a reference to the tool NuGet package Microsoft.AspNetCore.Mvc.Razor.ViewCompilation.Tools

- Add a postpublish script that triggers the dotnet razor-precompile tool

So your project.json might look like this (other sections omitted):

```json
{
  "dependencies": {
    …
    "Microsoft.AspNetCore.Mvc.Razor.ViewCompilation.Design": "1.1.0-preview4-final",
    "Microsoft.AspNetCore.Mvc.Razor.ViewCompilation.Tools": "1.1.0-preview4-final"
  },
  "tools": {
    …
    "Microsoft.AspNetCore.Mvc.Razor.ViewCompilation.Tools": "1.1.0-preview4-final"
  },
  "scripts": {
    …
    "postpublish": [
      "dotnet publish-iis --publish-folder %publish:OutputPath% --framework %publish:FullTargetFramework%",
      "dotnet razor-precompile --configuration %publish:Configuration% --framework %publish:TargetFramework% --output-path %publish:OutputPath% %publish:ProjectPath%"
    ]
  }
}
```

Bear in mind that with this setup, when you run your project in VS, the views won’t be precompiled. Only publishing the project will trigger the view pre-compilation.

If you want the precompilation to run when building the project locally (so you can detect view errors), I haven’t found a proper solution yet. The only workaround I have found (apart from manually calling it from the command line) is to add a postcompile script:

```json
"postcompile": [ "dotnet razor-precompile -o %project:Directory%\\bin\\temp %project:Directory%" ],
```

Note: Don’t blindly add this workaround to your project! I would leave it commented out and only uncomment it occasionally, whenever I want to check my views locally. If you leave it wired all the time, it disables incremental compilation of your project, increasing build time. Also consider compiling the views to a temp folder, so that when the script is commented out, the project doesn’t keep using the views precompiled the last time it ran.

For now, I find this much more useful as part of a Continuous Integration (CI) script, where it detects (some) errors in the views and speeds up the first request.

Detecting Errors in Views

Before we move on, let me show you what happens when it detects an error in a view:

- Let’s say I misspell the name of a partial view or a tag helper parameter, or there is some type mismatch. I would then get an error at runtime:

Figure 3, runtime error in a view

- But if I run the pre-compilation, it will detect this and let me know:

```
1> Running Razor view precompilation.
1>Views\Articles\Details.cshtml(11,34): error CS1061: 'Article' does not contain a definition for 'ContentsAA' and no extension method 'ContentsAA' accepting a first argument of type 'Article' could be found (are you missing a using directive or an assembly reference?)
```

Response Compression and Caching

These features are often added to the web server hosting your application, like IIS or Apache, but it is now possible to add them directly to your application.

It also brings into ASP.NET Core capabilities that developers might expect from previous versions. (We had the OutputCacheAttribute in previous versions of ASP.NET MVC, and if you search in Google you will see plenty of pages explaining how to create a gzip/deflate compression attribute.)

Adding response compression

This is as simple as adding the NuGet package Microsoft.AspNetCore.ResponseCompression to your project and updating the Startup class with the compression services and middleware:

```csharp
// In your Startup.ConfigureServices
services.AddResponseCompression();

// In your Startup.Configure, include as one of the first middlewares
app.UseResponseCompression();
```

Right now, only GZIP compression is supported out of the box. However, the response compression package allows adding additional ICompressionProvider implementations as part of the options used with services.AddResponseCompression(). If you need to, you could roll your own deflate implementation or wait until someone creates one.
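A deflate provider would mostly need to advertise the deflate encoding name and wrap the response stream in a compressing stream. The sketch below shows only that wrapping step, using System.IO.Compression.DeflateStream outside of ASP.NET Core; the CreateDeflateStream helper is hypothetical, and in an actual ICompressionProvider implementation this logic would sit in the method that creates the compression stream.

```csharp
using System;
using System.IO;
using System.IO.Compression;
using System.Linq;
using System.Text;

// Hypothetical core of a deflate provider: wrap the output stream so that everything
// written to it is compressed. leaveOpen keeps the underlying (response) stream usable
// after the compressing stream is disposed.
Stream CreateDeflateStream(Stream outputStream) =>
    new DeflateStream(outputStream, CompressionLevel.Fastest, leaveOpen: true);

// Repetitive markup, as typical HTML is, compresses well
var html = string.Concat(Enumerable.Repeat("<div class=\"row\">Hello</div>\n", 200));
var original = Encoding.UTF8.GetBytes(html);

var body = new MemoryStream();
using (var deflate = CreateDeflateStream(body))
{
    deflate.Write(original, 0, original.Length);
}

var compressedLength = body.Length;
Console.WriteLine($"original: {original.Length} bytes, deflate: {compressedLength} bytes");

// Round trip to check that the compressed body decodes back to the same markup
body.Position = 0;
using var reader = new StreamReader(new DeflateStream(body, CompressionMode.Decompress));
var roundTrip = reader.ReadToEnd();
Console.WriteLine(roundTrip == html);
```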

As a quick test, I have captured the HTML, JS and CSS requests of this project, both without and with GZIP response compression:

Figure 4, responses without compression

Figure 5, responses with GZIP compression

Adding output caching

Unsurprisingly, you need to add a new package to your project; this time it is named Microsoft.AspNetCore.ResponseCaching.

Then, add the services and middleware to your application:

```csharp
// In your Startup.ConfigureServices
services.AddResponseCaching();

// In your Startup.Configure
app.UseResponseCaching();
```

And you are almost good to go. I say almost because the middleware will only cache certain responses, depending on properties of both the request and the response. (Essentially, caching has to be enabled through the Cache-Control header, and the response must be safe to cache.)

You can see the actual policy that decides whether a response should be cached or not in the ResponseCachingPolicyProvider of its GitHub repo. At a very high level:

- The request should be either a GET or a HEAD request

- There should be no Authorization header in the request

- The response status code should be 200

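- The response should contain a Cache-Control header marked as public. You can do this using the ResponseCacheAttribute or manually, as in either of:

  ```csharp
  // Option 1: decorate the controller or action with the attribute
  [ResponseCache(Duration = 60, Location = ResponseCacheLocation.Any)]

  // Option 2: set the header manually from the action
  // (GetTypedHeaders lives in Microsoft.AspNetCore.Http, CacheControlHeaderValue in Microsoft.Net.Http.Headers)
  HttpContext.Response.GetTypedHeaders().CacheControl = new CacheControlHeaderValue()
  {
      Public = true,
      MaxAge = TimeSpan.FromSeconds(60)
  };
  ```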

- The response Cache-Control header should not contain “no-store” or “no-cache”

- The response should not contain a Vary header with value “*”

- The response should not set any new cookie
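The criteria above can be summarized in a small predicate. This is a simplified, framework-free sketch taking plain header dictionaries instead of an HttpContext; the IsCacheable and Headers helpers are hypothetical, and the real ResponseCachingPolicyProvider performs additional checks (for example on the freshness of the response) that are left out here.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical, simplified version of the caching criteria listed above
bool IsCacheable(
    string method,
    IDictionary<string, string> requestHeaders,
    int statusCode,
    IDictionary<string, string> responseHeaders)
{
    // Request: only GET/HEAD without an Authorization header
    if (method != "GET" && method != "HEAD") return false;
    if (requestHeaders.ContainsKey("Authorization")) return false;

    // Response: 200 with Cache-Control: public and without no-store/no-cache
    if (statusCode != 200) return false;
    if (!responseHeaders.TryGetValue("Cache-Control", out var cacheControl)) return false;

    var directives = cacheControl
        .Split(',')
        .Select(d => d.Trim().ToLowerInvariant())
        .ToList();

    if (!directives.Contains("public")) return false;
    if (directives.Contains("no-store") || directives.Contains("no-cache")) return false;

    // Vary: * and new cookies make the response unsafe to share
    if (responseHeaders.TryGetValue("Vary", out var vary) && vary.Trim() == "*") return false;
    if (responseHeaders.ContainsKey("Set-Cookie")) return false;

    return true;
}

// Header names are case-insensitive, as in HTTP
Dictionary<string, string> Headers(params (string Name, string Value)[] headers) =>
    headers.ToDictionary(h => h.Name, h => h.Value, StringComparer.OrdinalIgnoreCase);

// e.g. a public response cacheable for 60 seconds
var publicResponse = Headers(("Cache-Control", "public, max-age=60"));

Console.WriteLine(IsCacheable("GET", Headers(), 200, publicResponse)); // True
Console.WriteLine(IsCacheable("POST", Headers(), 200, publicResponse)); // False
Console.WriteLine(IsCacheable("GET", Headers(("Authorization", "Bearer x")), 200, publicResponse)); // False
```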

If your request and response meet the criteria, then it will be cached by the new middleware. Let’s see an example:

- Add the following controller action and set a breakpoint on it:

```csharp
[ResponseCache(Duration = 60, Location = ResponseCacheLocation.Any)]
public IActionResult Test()
{
    return Json(new { CurrentTime = DateTime.Now.ToString() });
}
```

- Start the project and send a request from the Chrome console, as in $.getJSON('/home/test'). Your breakpoint should be hit.

- Now open a new Chrome incognito window (so it doesn’t share the browser cache with the previous window; you could also use Fiddler) and send the same request from the console. The browser will send the request, but your breakpoint won’t be hit and you will get the same response as in the other window.

You will also notice in the VS output window that the first request hits your controller, while the second request is served from the cache:

Figure 6, cache logging output

Cookie storage for TempData

Up until now, TempData only came with session storage support out of the box. Let’s say you wanted to keep some data from the current request so that it’s available in the next request. TempData was a good fit for that scenario, but it meant you had to enable sessions.

Any attempt to use TempData without adding session support to your application would result in an InvalidOperationException being thrown with the message “Session has not been configured for this application or request.”

In ASP.Net Core 1.1, you can now support TempData using cookies, wiring the CookieTempDataProvider in your Startup.ConfigureServices:

```csharp
services.AddSingleton<ITempDataProvider, CookieTempDataProvider>();
```

Now whenever you add a value to the TempData dictionary, the dictionary will be serialized and encrypted using the Data Protection API, then sent to the browser as a cookie. For example:

```
Set-Cookie: .AspNetCore.Mvc.CookieTempDataProvider=CfDJ8P4n6uxULApNkzyVaa34lxdbCG1kNKglCZ4Xzm2xMHd6Uz-nwUbVSnyE_5RhOSGtD9vWLsW_26cBbce2wad6-h89VBSpliZcob9NNMFbbNTSdPktOBExmFtLD6DluoxPdUSPGPCbTv4ysgjzxOdHcBQ7ddfhG_RLkt-4W5VI5vRB; path=/; httponly
```

The cookie will be automatically sent with the next request, so you will be able to retrieve the same value from the TempData dictionary in the next action:

```csharp
[HttpPost]
public IActionResult Register()
{
    //...
    TempData["Message"] = "Thanks for registering!";
    return RedirectToAction("Index", "Home");
}

public IActionResult Index()
{
    ViewData["Message"] = TempData["Message"];
    return View();
}
```

You might be asking yourself, when is the data removed from the cookie? Well, whenever you read a key from the TempData dictionary, the key is marked for deletion. This is true regardless of the TempData provider you use (cookies or session).

At the end of the request, keys marked for deletion will be removed from the dictionary and the resulting dictionary is again serialized, encrypted and saved as a cookie. For example, if the only key is deleted you will see the following in the response:

```
Set-Cookie: .AspNetCore.Mvc.CookieTempDataProvider=; expires=Thu, 01 Jan 1970 00:00:00 GMT; path=/
```

So TempData behaves the same regardless of which provider (cookies or session) you are using. Data stored in TempData will be alive in the cookie until you read it from the TempData dictionary.

Note: Remember that you can use the Peek and Keep methods, respectively, to read a value without marking it for deletion, or to keep a key that has already been marked for deletion.
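The read/mark/remove cycle, including Peek and Keep, can be modeled with a dictionary and a set of marked keys. This is a hypothetical, framework-free sketch of the behavior described above rather than the TempDataDictionary implementation, and it leaves out serialization and encryption: reading a key marks it, Peek reads without marking, Keep removes the mark and Save drops the marked keys at the end of a request.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model: state that would be restored from the cookie (or session)
var data = new Dictionary<string, object>();
var markedForDeletion = new HashSet<string>();

// Reading a value marks the key for deletion
object Read(string key)
{
    markedForDeletion.Add(key);
    return data.TryGetValue(key, out var value) ? value : null;
}

// Peek reads without marking
object Peek(string key) => data.TryGetValue(key, out var value) ? value : null;

// Keep removes the mark from a key that was already read
void Keep(string key) => markedForDeletion.Remove(key);

// End of request: marked keys are removed, the rest would be persisted again
void Save()
{
    foreach (var key in markedForDeletion)
    {
        data.Remove(key);
    }
    markedForDeletion.Clear();
}

// Request 1 (Register): store the message
data["Message"] = "Thanks for registering!";
Save();

// Request 2 (Index): reading it means it is gone after this request
Console.WriteLine(Read("Message")); // Thanks for registering!
Save();
Console.WriteLine(data.ContainsKey("Message")); // False

// Peek and Keep leave a value in place
data["Note"] = "still here";
Save();
Console.WriteLine(Peek("Note")); // still here
Console.WriteLine(Read("Note")); // still here
Keep("Note");
Save();
Console.WriteLine(data.ContainsKey("Note")); // True
```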

Other Features in ASP.NET Core 1.1

What I have talked about until now is not a comprehensive list of all the changes, but I hope it will cover the most interesting changes for ASP.Net developers in general. I will briefly cover additional changes that you might find interesting. (Check the announcement post for more details)

3rd Party Dependency Injection Containers

You must have encountered the built-in dependency injection container if you have ever used ASP.Net Core. This is the API that allows you to register services and automatically resolves object graphs when needed (like instantiating a Controller).

Although you could also wire your preferred 3rd party dependency injection container, this wasn’t as straightforward as it should be. There is a new IServiceProviderFactory interface that should make things easier. It allows you to replace the default container when configuring the WebHostBuilder, right before the Startup class is instantiated and starts registering the services.

Note: Check the sample project they created that shows how to wire Autofac and StructureMap.

Azure

Cloud services in general, and Azure in particular, keep receiving attention. A new logging provider for Azure App Services has been introduced, which collects your logging information and makes it available as configured in the Diagnostics section of the App Service in the Azure portal.

As you may know, the Logging framework allows you to use the ILogger API across your app and configure different providers for capturing the data (like log4net, elmah or now Azure App Services).

An Azure Key Vault Configuration Provider has been added, which allows you to store secrets and keys in Azure and retrieve them as any other Configuration settings in your application. This works just like the other Configuration providers like json files, user secrets or environment variables.

Finally, new Data Protection Key Repositories have been added for Redis and Azure. This allows you to store your data protection keys inside Redis or Azure Blob Storage, so you can easily share the same keys between multiple applications/instances.

Note: If you have multiple instances of your application, sharing the keys is necessary for the authentication cookie or CSRF protection to work across those instances. Otherwise, the keys would be different on each instance, so, for example, instance A wouldn’t be able to understand the authentication cookie issued by instance B.

Windows WebListener server

This is an alternative Windows-only server option that runs directly on top of the Windows HTTP Server API (an API that enables Windows applications to communicate over HTTP without using IIS).

You might be interested in this new server option if you need Windows specific features (like Windows Authentication) and/or plan to deploy using Windows containers.

Performance

Finally, it is worth highlighting that the performance of the ASP.NET Core framework keeps improving, as reflected in the recently released Round 13 of the TechEmpower benchmarks.

The results show ASP.NET Core using Kestrel on Linux as one of the top performers in the Plaintext scenario, and TechEmpower expressed its surprise at the great performance improvement shown by the framework (compared to the performance of the previous framework on Mono).

Other scenarios like JSON serialization or single/multiple DB queries show there is still room for improvement, but they are still very significant improvements over the previous Mono results. You can see the results of all the scenarios on the TechEmpower website; I think you will find them very interesting.

- They specifically highlight ASP.NET Core in their results announcement, dedicating an entire section to it. Even in the summary they wrote: We also congratulate the ASP.NET team for the most dramatic performance improvement we've ever seen, making ASP.NET Core a top performer. (TechEmpower started measuring performance in March 2013; the improvement is relative to the previous Mono results in Round 11.)

- The ASP.Net team proudly states in the 1.1 announcement blogpost: the performance of ASP.NET Core running on Linux is approximately 760 times faster than it was one year ago.

If you want to see the exact source code of the ASP.NET Core applications, TechEmpower publishes on GitHub the source code of all the applications that participated in the benchmark. For ASP.NET Core, check these.

Overall, the ASP.NET team has been working hard on improving performance and making sure the new framework is fast. Luckily for us, their new open approach means we get to see their discussions; for example, check this GitHub issue about decreasing memory consumption in .Net 1.1.

Conclusion

As you can see, ASP.NET Core keeps maturing and receiving new functionality. There are still the issues you might expect from such an evolving framework; for example, installing and migrating to version 1.1 isn’t as smooth as one would hope. The tooling is also lagging behind when it comes to the newer features.

You still need to carefully decide if you will use it on your next project, as there is definitely a price to pay for the new features, improved flexibility, performance and multiplatform capabilities. Depending on the team and project, you might not want to discard the full .Net Framework yet.

In any case, it is great seeing how things are falling into place. ASP.NET Core performance keeps getting better, Microsoft joined the Linux Foundation, Google Cloud joined the .NET Foundation Technical Steering Group, Visual Studio is finally coming to Mac… Check the entire list of new features in What’s Brewing for .NET Developers.

These are exciting times for us developers!

This article has been editorially reviewed by Suprotim Agarwal.


Daniel Jimenez Garcia is a passionate software developer with 10+ years of experience who likes to share his knowledge and has been publishing articles since 2016. He started his career as a Microsoft developer focused mainly on .NET, C# and SQL Server. In the latter half of his career he worked on a broader set of technologies and platforms, with a special interest in .NET Core, Node.js, Vue, Python, Docker and Kubernetes. You can check out his repos.