Code quality is a loose measure of how useful, correct and maintainable code is. Writing good quality code reduces the number of bugs found at later stages of product development. Visual Studio provides many built-in tools that help developers write quality code.

This article is published in the DNC Magazine for Developers and Architects.

This article discusses the tools Visual Studio offers developers for testing and for improving code quality. Tools like Code Analysis, Code Metrics, IntelliTrace and Code Profiling help deliver high quality code, as do writing unit tests, using IntelliTest and measuring code coverage. We will see how to write unit tests with the Microsoft testing framework (MSTest) and with a third-party framework, and how to use IntelliTest. Later we will discuss how Code Coverage helps in unit testing, and take a brief look at Code Analysis, the Maintainability Index, IntelliTrace and Code Profiling.

Once code is written, unit tests help developers find logical errors in it. Some teams instead follow a Test Driven Development (TDD) approach, in which the test is written first and the code is then written to make that test pass.

In this article, we will create unit tests for code that already exists. Download and install Visual Studio 2015 if you haven't already done so.

Testing Related Tools for Developers

Creating Unit Tests

1. Create a solution and add a class library with some functionality to it. We will write unit tests for the methods in the class library.

2. Next, add a Unit Test Project to the current solution: select a method for which a unit test is needed, right-click it and select Create Unit Tests.

3. Select the option to create a new Test Project. The Unit Test Project is added with a reference to the class library. For the body of each generated method, you can choose an empty body, a statement that throws an exception, or an Assert.Fail statement, as shown in this figure.

4. For this example, select the default Assert failure option. The generated stub for the test method then contains a single Assert.Fail() statement.

5. The test class is decorated with the TestClass attribute, and the method with the TestMethod attribute. If either attribute is missing, the test method is ignored.

6. Replace the Assert.Fail() statement with code that tests the method. You can run the test using Test Explorer; if it is not visible, open it from Test > Windows > Test Explorer. Select the test method, right-click it and choose Run.

The test passes.

7. Four additional attributes handle initialization and cleanup at class or test level. A ClassInitialize method runs before the first test in the class is run, and a ClassCleanup method runs after the last test in the class has finished. Similarly, TestInitialize and TestCleanup methods run before and after each individual test.
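The unit-testing steps above can be sketched in C#. This is a minimal sketch, not the article's own code: it assumes a hypothetical class-library type named Calculator with Add and Divide methods, and a Unit Test Project that references that library and the MSTest framework (Microsoft.VisualStudio.TestTools.UnitTesting). It shows the TestClass and TestMethod attributes from step 5, a real test body in place of the generated Assert.Fail() stub, and the four initialization and cleanup attributes from step 7.

```csharp
using System;
using Microsoft.VisualStudio.TestTools.UnitTesting;

namespace CalculatorLibrary
{
    // Hypothetical class-library type under test (normally in its own project).
    public class Calculator
    {
        public int Add(int a, int b)
        {
            return a + b;
        }

        public int Divide(int a, int b)
        {
            // Integer division throws DivideByZeroException when b is 0.
            return a / b;
        }
    }
}

namespace CalculatorLibrary.Tests
{
    [TestClass] // required: classes without it are not discovered
    public class CalculatorTests
    {
        private Calculator calculator;

        // Runs once, before the first test in this class.
        [ClassInitialize]
        public static void ClassSetUp(TestContext context)
        {
        }

        // Runs once, after the last test in this class has finished.
        [ClassCleanup]
        public static void ClassTearDown()
        {
        }

        // Runs before each test method.
        [TestInitialize]
        public void SetUp()
        {
            calculator = new Calculator();
        }

        // Runs after each test method.
        [TestCleanup]
        public void TearDown()
        {
            calculator = null;
        }

        // The generated stub contained only Assert.Fail(); it is replaced
        // here with a real check.
        [TestMethod] // required: methods without it are ignored
        public void AddTest()
        {
            Assert.AreEqual(5, calculator.Add(2, 3));
        }

        [TestMethod]
        [ExpectedException(typeof(DivideByZeroException))]
        public void DivideByZeroTest()
        {
            calculator.Divide(10, 0);
        }
    }
}
```

Naming the setup methods SetUp and TearDown (rather than reusing the attribute names) is only a readability choice; MSTest finds them through the attributes, not the names.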

Convert Unit Test to Data Driven Unit Test

In the previous example, the test method always used the same set of parameters and produced the same result. In real-life scenarios, it is better to test with varying parameters. To achieve this, we convert the test method to a data driven test. The data can be provided via an XML file, a CSV file or even a database.

1. Add a New Item, select the Data tab and choose XML File. Give the file any name you wish. In the file's properties, change Copy to Output Directory to Copy if newer.

2. Provide data for parameters along with the expected result.

3. Now add a DataSource attribute to the test method. Add a reference to the System.Data assembly, and add a TestContext property to the test class; the method reads the current record through TestContext.DataRow.

4. Run the test and observe that it executes three times, once for each record in the data file.

A data driven unit test can thus use a different set of parameters each time it executes.
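A data driven test might look like the following sketch. It assumes the MSTest DataSource attribute with its built-in XML provider, a hypothetical data file named AddData.xml that is copied to the output directory as in step 1, and hypothetical element names A, B and Expected; the assumed file layout is shown in a comment. A small Calculator class is included only to keep the sketch self-contained.

```csharp
using System;
using Microsoft.VisualStudio.TestTools.UnitTesting;

// Hypothetical contents of AddData.xml (one Row element per test case):
// <?xml version="1.0" encoding="utf-8" ?>
// <Rows>
//   <Row><A>2</A><B>3</B><Expected>5</Expected></Row>
//   <Row><A>-1</A><B>1</B><Expected>0</Expected></Row>
//   <Row><A>10</A><B>15</B><Expected>25</Expected></Row>
// </Rows>

// Hypothetical type under test.
public class Calculator
{
    public int Add(int a, int b) => a + b;
}

[TestClass]
public class CalculatorDataDrivenTests
{
    // Set by MSTest; DataRow holds the current record. Its type,
    // System.Data.DataRow, is why the System.Data reference is needed.
    public TestContext TestContext { get; set; }

    // Read the "Row" elements from the XML file; the test runs once per row.
    [DataSource("Microsoft.VisualStudio.TestTools.DataSource.XML",
        "|DataDirectory|\\AddData.xml", "Row", DataAccessMethod.Sequential)]
    [TestMethod]
    public void AddDataDrivenTest()
    {
        int a = Convert.ToInt32(TestContext.DataRow["A"]);
        int b = Convert.ToInt32(TestContext.DataRow["B"]);
        int expected = Convert.ToInt32(TestContext.DataRow["Expected"]);

        Assert.AreEqual(expected, new Calculator().Add(a, b));
    }
}
```

With the three rows shown, the test would run three times, matching step 4.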

IntelliTest

Visual Studio offers a useful utility called IntelliTest (formerly called Smart Unit Tests). With this tool, you can find out how many of the generated tests pass or fail, and you can add code to fix the issues it reports. Writing an exhaustive test suite for a very complex piece of code requires a lot of effort, and there is a tendency to omit some test data, which may lead to bugs being caught at a much later stage. IntelliTest addresses this problem by generating the test data for you. It helps in detecting bugs early, and leads to better quality code.

1. Right-click the class and select Run IntelliTest.

2. IntelliTest creates a test suite for all the methods in the class and generates test data. Each code path is analysed based on its if statements and loops, and the results show which exceptions can be thrown.

Selecting a test case shows its details and suggests code that can be added. In this example, the divide by zero exception can be allowed by adding an attribute, and we can add the necessary code from there.

3. We also have the option of creating a Test Project and adding all the test methods to it. IntelliTest adds Pex attributes to the code, as can be seen in the following image.
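The kind of parameterized test IntelliTest works with can be sketched as follows. This is an illustrative sketch, not output copied from the tool: it assumes the Microsoft.Pex.Framework assemblies that IntelliTest references, and a hypothetical Calculator.Divide method. PexMethod marks a test whose arguments IntelliTest explores, and PexAllowedException records that a DivideByZeroException is an accepted outcome rather than a failure.

```csharp
using System;
using Microsoft.Pex.Framework;
using Microsoft.Pex.Framework.Validation;
using Microsoft.VisualStudio.TestTools.UnitTesting;

// Hypothetical type under test.
public class Calculator
{
    public int Divide(int a, int b) => a / b;
}

// Parameterized test class in the style IntelliTest generates.
[PexClass(typeof(Calculator))]
[TestClass]
public partial class CalculatorTest
{
    // IntelliTest chooses values for target, a and b, aiming to cover
    // every path through Divide.
    [PexMethod]
    [PexAllowedException(typeof(DivideByZeroException))] // b == 0 is accepted
    public int DivideTest([PexAssumeUnderTest] Calculator target, int a, int b)
    {
        int result = target.Divide(a, b);
        return result;
    }
}
```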

When generating tests, IntelliTest tries to find inputs that achieve high code coverage. Let us find out how Code Coverage helps improve code quality.

Analyzing Code Coverage

Code Coverage determines which code is executed when unit tests run. For large or complex code, it helps find portions that are not being tested at all, so that we can take the necessary action. Code coverage can be computed when you execute tests using Test Explorer.

1. From Test Explorer, select the unit tests to be executed, right-click and select “Analyze Code Coverage for Selected Tests”.

2. The results are displayed in the Code Coverage Results window.

3. If we drill into the assembly and open the code, code that was executed is highlighted in blue and code that was not executed in red.

4. Code coverage is measured in blocks. A block is a piece of code with exactly one entry point and one exit point. If control passes through a block, the block is counted as covered. You can also show additional columns by right-clicking the assembly and selecting Add/Remove Columns.

5. Select the columns for lines covered and their percentage.

6. The Code Coverage Results window then shows the complete data.

7. To view previous results, import them. To send the results to someone else, export them.
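To make block coverage concrete, consider this sketch with a hypothetical Grader class and a single MSTest test. Because the test only passes a score of 75, the block that returns "Fail" is never executed, so analysing coverage for this test alone would be expected to report that block as not covered; adding a second test with a low score would cover it.

```csharp
using Microsoft.VisualStudio.TestTools.UnitTesting;

// Hypothetical class with two paths through Grade.
public class Grader
{
    public string Grade(int score)
    {
        if (score >= 50)
        {
            return "Pass"; // executed by PassingScoreTest
        }

        return "Fail"; // not executed by any test below, so not covered
    }
}

[TestClass]
public class GraderTests
{
    [TestMethod]
    public void PassingScoreTest()
    {
        Assert.AreEqual("Pass", new Grader().Grade(75));
    }
}
```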

Third Party Testing Framework

At times, we may want to use a third-party framework for unit testing. The default testing framework in Visual Studio is MSTest, but you can download and install various third-party testing frameworks for use with Visual Studio.

1. Select Tools > Extensions and Updates, and then the Online > Visual Studio Gallery tab.

2. Type the name of the framework in the search box.

3. Download and install the NUnit extension (the NUnit test adapter, which lets Test Explorer run NUnit tests).

4. Right-click the Test Project and select Manage NuGet Packages. Find NUnit and install it. A reference to nunit.framework.dll is added automatically.

5. Add a new item of type Unit Test, and change its code to use the NUnit framework in place of MSTest.

6. Run the test from Test Explorer.
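An NUnit test class could look like the following sketch. It assumes the NUnit package from step 4 (NUnit 3 or earlier, where the classic Assert.AreEqual is available on Assert) and an NUnit test adapter so that Test Explorer can discover the tests; the Calculator class is hypothetical. TestFixture and Test play the roles of MSTest's TestClass and TestMethod, SetUp runs before each test like TestInitialize, and TestCase supplies parameter sets inline.

```csharp
using NUnit.Framework;

// Hypothetical type under test.
public class Calculator
{
    public int Add(int a, int b) => a + b;
}

[TestFixture] // NUnit's counterpart of [TestClass]
public class CalculatorNUnitTests
{
    private Calculator calculator;

    [SetUp] // runs before each test, like [TestInitialize]
    public void Init()
    {
        calculator = new Calculator();
    }

    [Test] // NUnit's counterpart of [TestMethod]
    public void AddTest()
    {
        Assert.AreEqual(5, calculator.Add(2, 3));
    }

    // Each TestCase attribute runs the method once with its arguments.
    [TestCase(1, 1, 2)]
    [TestCase(-4, 4, 0)]
    public void AddWithCases(int a, int b, int expected)
    {
        Assert.AreEqual(expected, calculator.Add(a, b));
    }
}
```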

So far, we have seen the testing-related tools for developers. These tools help identify bugs at an early stage, thereby improving overall code quality.

Now let us delve into tools which will help developers write better quality code.

Tools to write Quality Code

Code Analysis

Code needs to follow certain rules. These may be the organization's own standards, or standards enforced by the customer for whom the application is being developed. Code Analysis (also called Static Code Analysis) finds areas in the code that do not follow a set of accepted rules. This tool is part of Visual Studio and can be applied to any project. Code analysis can be enabled to run on every build, or run whenever required.

Visual Studio provides many rulesets, such as Microsoft All Rules, Microsoft Basic Correctness Rules and Microsoft Basic Design Guideline Rules. One or more of these rulesets can be applied to a project. By default, a warning is reported when a rule is not followed.

1. To see the rulesets, right-click the project to which you want to apply code analysis and select Properties. Select the Code Analysis tab and open the drop-down to get a list of rulesets.

2. You can also right-click the project and select Analyze > Run Code Analysis.

Click Open to open the selected ruleset.

Note the Enable Code Analysis on Build check box.

3. Open the ruleset and expand one of the categories; the rules in each category are displayed. We cannot modify a built-in ruleset directly, but we can change any of its rules in a copy of the ruleset, and the copy can be edited as required.

4. An action, such as Warning or Error, can be set for any rule.

For most rules, a violation produces a warning. Changing the action to Error ensures that developers cannot ignore the rule, since it is human tendency to ignore warnings.

5. After changing one or more rules in a ruleset, we can give it a name and save this copy for future use. It can later be applied to a project to report the errors and warnings as configured.
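As an illustration of what a ruleset checks, the following sketch shows a hypothetical LogReader class that would be expected to trigger rule CA1001 (types that own disposable fields should be disposable), which is part of Microsoft All Rules, followed by one way to address it. Whether the violation appears as a warning or as an error depends on the action set for CA1001 in the ruleset in use.

```csharp
using System;
using System.IO;

// Likely flagged by CA1001: the class owns a disposable field
// (StreamReader) but does not implement IDisposable itself.
public class LogReader
{
    private readonly StreamReader reader;

    public LogReader(string path)
    {
        reader = new StreamReader(path);
    }

    public string ReadFirstLine() => reader.ReadLine();
}

// One way to satisfy the rule: implement IDisposable and dispose the field.
public sealed class DisposableLogReader : IDisposable
{
    private readonly StreamReader reader;

    public DisposableLogReader(string path)
    {
        reader = new StreamReader(path);
    }

    public string ReadFirstLine() => reader.ReadLine();

    public void Dispose()
    {
        reader.Dispose();
    }
}
```

Callers can then wrap the reader in a using statement so the file handle is released deterministically.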

Note: Code Analysis was previously known as FxCop. It helps find programming-related issues in the code.

Code Metrics

Visual Studio can measure the maintainability index of code, as well as its complexity. As the name suggests, Code Metrics provides information about whether the code is maintainable. The maintainability index was originally developed at Carnegie Mellon University; Microsoft adopted it and has included it in Visual Studio since Visual Studio 2008. Alongside the index, Code Metrics reports four other measures: Cyclomatic Complexity, Depth of Inheritance, Class Coupling and Lines of Code.

Cyclomatic Complexity counts the number of independent paths through the code, based on its loops and decisions. Class Coupling counts the number of other classes a class depends on; the higher the coupling, the harder the code usually is to maintain. Depth of Inheritance is the number of classes in the inheritance chain from a class up to the Object class. Lines of Code is the approximate number of executable lines. The maintainability index ranges from 0 to 100: 0 to 9 is low, 10 to 19 is moderate, and 20 and above is high. The higher the index, the easier the code is likely to be to maintain.

To use this tool, select a project or the complete solution, right-click and select Calculate Code Metrics. The results are shown for each of the metrics mentioned above.
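The maintainability index itself is a formula. The following sketch implements the rescaled formula that Microsoft's Code Analysis team published for Visual Studio code metrics, which combines Halstead Volume, Cyclomatic Complexity and Lines of Code (Depth of Inheritance and Class Coupling are reported separately). The class and method names are hypothetical, and Visual Studio derives its inputs from compiled IL, so its reported values may differ from a hand calculation.

```csharp
using System;

// Hypothetical helper. Formula as published for Visual Studio code metrics:
// MI = MAX(0, (171 - 5.2 * ln(HV) - 0.23 * CC - 16.2 * ln(LOC)) * 100 / 171)
public static class MaintainabilityIndex
{
    public static double Compute(double halsteadVolume, int cyclomaticComplexity, int linesOfCode)
    {
        // Math.Log is the natural logarithm.
        double raw = 171
            - 5.2 * Math.Log(halsteadVolume)
            - 0.23 * cyclomaticComplexity
            - 16.2 * Math.Log(linesOfCode);

        // Scale by 100/171 and clamp negative values to 0.
        return Math.Max(0, raw * 100 / 171);
    }

    // Bands described above: 0-9 low, 10-19 moderate, 20 and above high.
    public static string Rating(double index)
    {
        if (index >= 20) return "High";
        if (index >= 10) return "Moderate";
        return "Low";
    }
}
```

For example, a Halstead Volume of 1000, a Cyclomatic Complexity of 10 and 50 lines of code work out to an index of roughly 40.6, which falls in the high band.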

IntelliTrace

Microsoft introduced this tool in Visual Studio 2010. It was originally called the Historical Debugger, as it records a history of parameters and variables during debugging. Debugging is part of every developer's routine, and it can take a lot of time to step through the code until a breakpoint is hit. If a developer doesn't know the code well, or doesn't remember where the breakpoints are, debugging can become very time consuming.

IntelliTrace helps in minimizing the time for debugging.

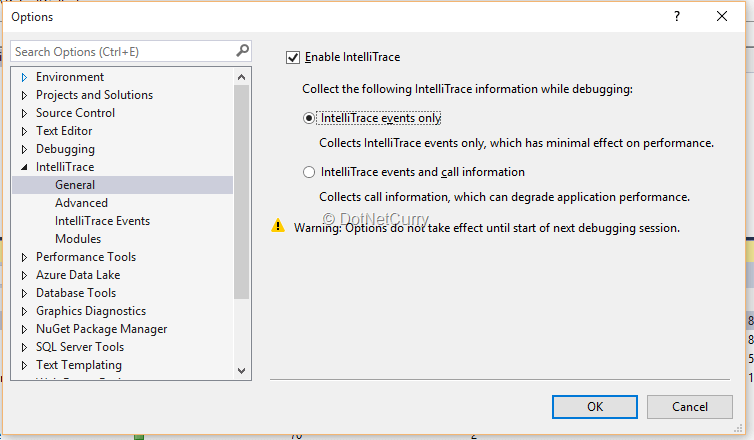

IntelliTrace is enabled by default; its settings can be changed from Tools > Options, under the IntelliTrace tab.

IntelliTrace information can be gathered in two ways: by collecting only events information, or by collecting both events and calls information. We can also select which events need to be captured. The more events are captured, the more information we get, but at the cost of the application's overall performance.

IntelliTrace creates an .iTrace file that stores the collected information about events and calls. By default, this file is deleted automatically when the Visual Studio instance is closed, but we can change the file's location and keep it for later use. The file can be opened in Visual Studio, so you can share a complete debugging history with a colleague when you need help.

IntelliTrace files can also be created using Microsoft Test Manager, and in production environments.

Code Profiling

Code Profiling is used to find performance-related issues in an application. Profiling requires the .pdb (symbol) files for the code. Performance data can be collected in three ways: Sampling, Instrumentation and Concurrency.

You can also use the Sampling or Instrumentation method to collect data about memory allocation. To start profiling, you can use the Performance Wizard, which can be started from Debug > Performance > Performance Explorer > New Performance Session. The properties of the performance session let you set the data collection parameters.

Conclusion

This article discussed various testing tools from a developer's perspective. We discussed how to create unit tests and convert them to data driven tests, which are useful for running a test with multiple sets of parameters and checking the results. We discussed the importance of IntelliTest, and how to include a third-party framework and write unit tests with it. We also discussed how to find the code covered during test execution. We have seen how tools like Code Analysis and Code Metrics help in writing better quality code. IntelliTrace helps in debugging by giving us historical debugging data, and the Code Profiling tools help in analysing an application's performance issues.

This set of tools in Visual Studio 2015 can give developers better insight into the code they are developing, and help them identify and fix potential risks in order to create quality, maintainable code.

This article has been editorially reviewed by Suprotim Agarwal.


Gouri is a trainer and consultant on Azure DevOps and Azure development, with three decades of experience in software training and consulting. She is a graduate of Pune University and also holds a PGDCA from Pune University. Gouri is a Microsoft Most Valuable Professional (MVP) - Developer Technologies (Azure DevOps), a Microsoft Certified Trainer (MCT) and a Microsoft Certified Azure DevOps Engineer Expert. She has conducted over 150 corporate trainings on various Microsoft technologies. She is a speaker with Pune User Group and has conducted sessions on Azure DevOps, SQL Server Business Intelligence and Mobile Application Development. Gouri has written more than 75 articles on Azure DevOps, TFS, SQL Server Business Intelligence and SQL Azure, which are published on www.sqlservercurry.com and www.dotnetcurry.com. You can connect with her on LinkedIn.