In my recent experiments with Azure Storage, I found that Azure Blob items are private by default: unless you explicitly make them available, they cannot be accessed. However, opening up Blob access to the whole wide world can be a big bag of hurt, especially since you pay for data transfer on Azure.

Today we’ll see how to open up access to blob contents selectively by using a built-in mechanism in Azure Storage called Shared Access Signatures. Thanks a ton to Sumit Maitra for helping me understand some crucial bits of this technology.

What are Shared Access Signatures

Shared Access Signatures are conceptually similar to OAuth access tokens. A common way to explain the scenario is a padlock with several identical keys held by different people. Say you have padlocked a fence and given keys to two other people. You trust that only those two will use the keys, but you can never tell which of them actually opened the lock. Compare that with an RFID-based lock where every user has a uniquely identifiable key card: you can easily determine who used which card where, and if a card gets lost, only that particular card’s access can be revoked permanently.

OAuth uses a similar principle: it issues application-specific authentication tokens so that the provider knows exactly who is trying to log in and how. Similarly, Shared Access Signatures are pass-phrases handed out to trusted ‘users’. How and when your application hands them out depends on your app. When a client presents the pass-phrase, your application decides whether to act on it, and Azure Storage verifies the signature before granting access.

Today we’ll see how to obtain a Shared Access Signature from Azure Blob Storage and then use it to provide selective access. But before that, let’s set up some sample data.

Setting up our Azure Blob Storage

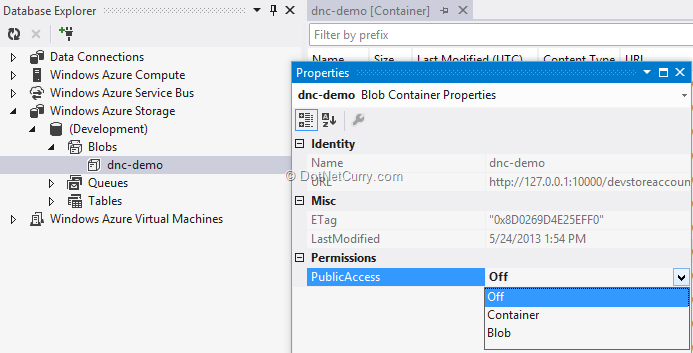

We won’t build a fancy application. Instead, we simply start our Storage Emulator and start up Visual Studio. Without even creating a solution, we can expand Database Explorer, navigate to the Blobs node, right-click on it and create a new Blob Container…

We’ll call the container dnc-demo.

Next we’ll double-click on the new container to open it.

Now we’ll upload a bunch of images in this container. Our container looks as follows:

Now if we try to access any of the above URLs in a browser, we’ll get a 404 Not Found error.

One way to fix this is to make the container publicly available by turning the Public-Access permission from Off to Container as shown below.

Once we do this, the exact URL of the container item is public and potentially accessible to everyone on earth who has an internet connection. Given that outgoing data transfer costs you a ‘pretty penny’, even if the document is not top secret, the mere fact that it can be downloaded by people who don’t care about it but still add to your cost is a point of concern. Let’s see how we can overcome this.

Shared Access Signatures

Azure Storage (Blobs/Queues/Tables) allows you to define access policies that enable temporary access to private resources. The policy takes the form of a set of URL parameters in a query string. When the query string is appended to the original URL of the storage item, Azure Storage verifies the signature and grants access only if the policy is still valid and includes the permission being requested.
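To make the idea concrete, here is a minimal sketch of how a signed query string can work in principle. It is not the Azure implementation: the class name SasSketch, the layout of the string being signed and the key are assumptions made for illustration, and the real string-to-sign has more fields that depend on the service version. What it does show is the general shape: the permissions and time window travel in plain text as query parameters (Azure uses names such as st, se, sp and sig), and an HMAC-SHA256 signature computed with a secret key lets the service detect tampering.

```csharp
using System;
using System.Globalization;
using System.Security.Cryptography;
using System.Text;

// Illustrative only: a simplified stand-in for a signed access token.
public static class SasSketch
{
    private const string TimeFormat = "yyyy-MM-dd'T'HH:mm:ss'Z'";

    private static string Format(DateTime utc)
    {
        return utc.ToString(TimeFormat, CultureInfo.InvariantCulture);
    }

    // HMAC-SHA256 over the policy fields, Base64 encoded.
    public static string Sign(string permissions, DateTime startUtc, DateTime expiryUtc,
                              string resource, byte[] key)
    {
        // Assumed layout; the real Azure string-to-sign differs by service version.
        string stringToSign = string.Join("\n",
            new[] { permissions, Format(startUtc), Format(expiryUtc), resource });
        using (var hmac = new HMACSHA256(key))
        {
            return Convert.ToBase64String(
                hmac.ComputeHash(Encoding.UTF8.GetBytes(stringToSign)));
        }
    }

    // Builds the query string that would be appended to the resource URL.
    public static string BuildQuery(string permissions, DateTime startUtc, DateTime expiryUtc,
                                    string resource, byte[] key)
    {
        string sig = Sign(permissions, startUtc, expiryUtc, resource, key);
        return "?st=" + Uri.EscapeDataString(Format(startUtc))
             + "&se=" + Uri.EscapeDataString(Format(expiryUtc))
             + "&sp=" + permissions
             + "&sig=" + Uri.EscapeDataString(sig);
    }

    // Service-side check: recompute the signature and confirm the time window.
    public static bool IsValid(string permissions, DateTime startUtc, DateTime expiryUtc,
                               string resource, string presentedSignature,
                               byte[] key, DateTime nowUtc)
    {
        string expected = Sign(permissions, startUtc, expiryUtc, resource, key);
        return expected == presentedSignature
            && nowUtc >= startUtc
            && nowUtc <= expiryUtc;
    }
}
```

Because the expiry time is part of the signed text, a client that edits the se parameter to extend its access ends up with a signature that no longer matches, so the request is rejected.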

Generating Shared Access Signatures

Shared Access Signatures can be generated at the container level or at the blob level, and the available permissions differ between the two. (Source: http://msdn.microsoft.com/en-us/library/windowsazure/hh508996.aspx )

At Container Level

1. Read Blob – Read the content, properties, metadata or block list of any blob in the container. Use any blob in the container as the source of a copy operation.

2. Write Blob – For any blob in the container, create or write content, properties, metadata, or block list. Snapshot or lease the blob.

3. Delete Blob – Delete any blob in the container. However, you cannot give permission to delete the container itself.

4. List – List all the blobs in the given container.

At the Blob Level

1. Read – Read the content, properties, metadata and block list. Use the given blob as the source of a copy operation.

2. Write – Create or write content, properties, metadata, or block list. Snapshot or lease the blob. Resize the blob (page blob only).

3. Delete – Delete the blob.
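As a rough illustration of how these permissions combine, the sketch below models them as a flags enum and converts a combination into the compact letters (r, w, d, l) carried in a signature's sp query parameter. The enum SketchPermissions and the class PermissionSketch are hypothetical names written for this example; they mirror the lists above and are not the SDK's own types.

```csharp
using System;
using System.Text;

// Hypothetical flags enum mirroring the permissions listed above.
[Flags]
public enum SketchPermissions
{
    None = 0,
    Read = 1,
    Write = 2,
    Delete = 4,
    List = 8
}

public static class PermissionSketch
{
    // Converts a flag combination into the letters used in the sp parameter,
    // always in the order read, write, delete, list.
    public static string ToPermissionString(SketchPermissions permissions)
    {
        var sb = new StringBuilder();
        if ((permissions & SketchPermissions.Read) != 0) sb.Append('r');
        if ((permissions & SketchPermissions.Write) != 0) sb.Append('w');
        if ((permissions & SketchPermissions.Delete) != 0) sb.Append('d');
        if ((permissions & SketchPermissions.List) != 0) sb.Append('l');
        return sb.ToString();
    }

    // List only makes sense on a container, so it is rejected for a single blob.
    public static bool IsValidForBlob(SketchPermissions permissions)
    {
        return (permissions & SketchPermissions.List) == 0;
    }
}
```

For instance, a Read and Write combination maps to the string rw, which is the combination this article grants later on.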

Today we’ll see how to generate a signature at the container level and use it to access the blobs in it.

The Sample

In our sample application, we will attempt to list all the files in a particular blob container with the following aims:

1. Not reduce security at the container level by opening up “Public Read Access”

2. Have a URL to our Blob content that expires

3. Have control over whom we issue the Valid URLs to

References and Setup

Step 1: We create a basic ASP.NET MVC 4 web application and add the Azure Storage and Azure Configuration Manager dependencies from NuGet.

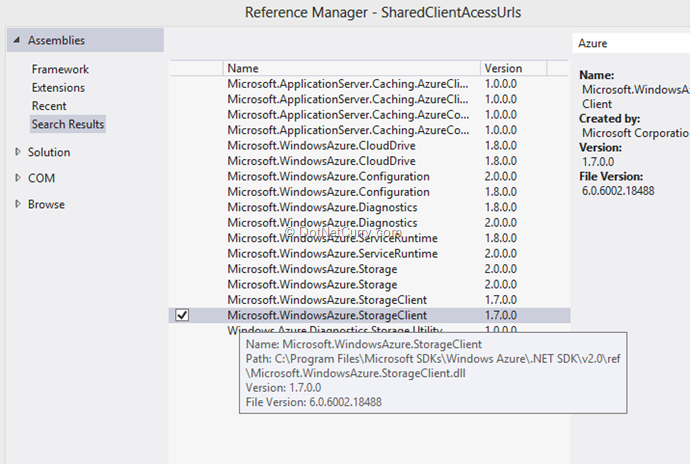

Step 2: Next we add the Azure Storage Client library from the References dialog.

As you can see above, if you have the latest Azure SDK installed you’ll see two StorageClient references. I picked the one in the …\v2.0\... folder.

Creating the Shared Access URL

Step 3: We add a class AzureBlobSA. This will be a static class with helper methods for getting a SAS URL as well as returning the URL for a file with the security information appended.

GetSASUrl – This method takes the name of the Blob Storage container and creates a new SharedAccessPolicy named twominutepolicy. The policy grants Read/Write access to all blobs in the given container. To allow for clock differences between machines, the start time is set to 1 minute before the current time and the expiry time to 2 minutes after it, giving a window of three minutes in total. The new policy is applied to the container and the signature string is returned by the method.

    public static string GetSASUrl(string containerName)
    {
        CloudStorageAccount storageAccount = CloudStorageAccount.Parse(
            ConfigurationManager.AppSettings["StorageAccountConnectionString"]);
        CloudBlobClient blobClient = storageAccount.CreateCloudBlobClient();
        CloudBlobContainer container = blobClient.GetContainerReference(containerName);
        container.CreateIfNotExist();

        // Define the access policy and store it on the container.
        BlobContainerPermissions containerPermissions = new BlobContainerPermissions();
        containerPermissions.SharedAccessPolicies.Add(
            "twominutepolicy", new SharedAccessPolicy()
            {
                // Start one minute in the past to allow for clock differences.
                SharedAccessStartTime = DateTime.UtcNow.AddMinutes(-1),
                SharedAccessExpiryTime = DateTime.UtcNow.AddMinutes(2),
                Permissions = SharedAccessPermissions.Write | SharedAccessPermissions.Read
            });
        // Keep the container itself private.
        containerPermissions.PublicAccess = BlobContainerPublicAccessType.Off;
        container.SetPermissions(containerPermissions);

        // The policy name must match the key added to SharedAccessPolicies.
        string sas = container.GetSharedAccessSignature(new SharedAccessPolicy(),
            "twominutepolicy");
        return sas;
    }
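The validity window that this policy sets up can be worked through with a small standalone sketch. The class PolicyWindowSketch and its method names are hypothetical; only the two offsets (one minute back, two minutes ahead) come from the method above. It shows that a request stamped slightly before the moment of issue is still accepted, while one arriving just after the two-minute mark is not.

```csharp
using System;

// Illustrative helper for reasoning about the policy's validity window.
public static class PolicyWindowSketch
{
    // Mirrors the offsets used in GetSASUrl: start 1 minute back, expire 2 minutes ahead.
    public static void GetWindow(DateTime issuedUtc, out DateTime startUtc, out DateTime expiryUtc)
    {
        startUtc = issuedUtc.AddMinutes(-1);
        expiryUtc = issuedUtc.AddMinutes(2);
    }

    // True when a request timestamped requestUtc falls inside the window.
    public static bool IsWithinWindow(DateTime issuedUtc, DateTime requestUtc)
    {
        DateTime startUtc, expiryUtc;
        GetWindow(issuedUtc, out startUtc, out expiryUtc);
        return requestUtc >= startUtc && requestUtc <= expiryUtc;
    }
}
```

With a signature issued at 10:00:00 UTC, the window runs from 09:59:00 to 10:02:00, which is the three minutes described above.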

GetSasBlobUrl – This method takes the Blob container name, the name of the file in Blob storage and the Shared Access Signature string, and creates a URL that stays valid for the duration of the access policy.

    public static string GetSasBlobUrl(string containerName, string fileName, string sas)
    {
        CloudStorageAccount storageAccount = CloudStorageAccount.Parse(
            ConfigurationManager.AppSettings["StorageAccountConnectionString"]);

        // Wrap the SAS string in credentials and use them for the blob client.
        StorageCredentialsSharedAccessSignature sasCreds =
            new StorageCredentialsSharedAccessSignature(sas);
        CloudBlobClient sasBlobClient =
            new CloudBlobClient(storageAccount.BlobEndpoint, sasCreds);
        CloudBlob blob = sasBlobClient.GetBlobReference(containerName + "/" + fileName);

        // Append the SAS query string to the blob's URI.
        return blob.Uri.AbsoluteUri + sas;
    }
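The method above builds the final URL by plain concatenation, so it relies on the signature string already starting with its ? separator. A slightly more defensive way to join the two parts could look like the sketch below. The class SasUrlSketch and the method AppendSas are hypothetical helpers, not part of the storage library, and the sample URL in the usage note assumes the Storage Emulator's default endpoint.

```csharp
using System;

// Hypothetical helper for joining a blob URL and a SAS token.
public static class SasUrlSketch
{
    // Adds the token as a query string, inserting "?" or "&" as needed.
    public static string AppendSas(string blobUrl, string sas)
    {
        if (string.IsNullOrEmpty(sas))
        {
            return blobUrl;
        }

        // Accept tokens with or without a leading "?".
        string query = sas.StartsWith("?", StringComparison.Ordinal) ? sas.Substring(1) : sas;
        string separator = blobUrl.Contains("?") ? "&" : "?";
        return blobUrl + separator + query;
    }
}
```

Calling AppendSas("http://127.0.0.1:10000/devstoreaccount1/dnc-demo/a.png", "sr=c&si=twominutepolicy&sig=abc") gives the same result whether or not the token carries the leading ?.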

There are two more helper methods, EncodeTo64 and DecodeFrom64, that encode/decode a file name to/from a Base64 encoded string. We’ll see their utility shortly.
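The article does not list these two helpers, so the following is only a guess at what they might look like: a minimal sketch that assumes UTF-8 text and the standard Base64 alphabet. The class name Base64Sketch is made up for the example; in the sample project the methods live in AzureBlobSA.

```csharp
using System;
using System.Text;

// Assumed implementation of the two helpers; the original source is not shown.
public static class Base64Sketch
{
    // Encodes a file name as Base64 over its UTF-8 bytes.
    public static string EncodeTo64(string plainText)
    {
        return Convert.ToBase64String(Encoding.UTF8.GetBytes(plainText));
    }

    // Reverses EncodeTo64 and returns the original file name.
    public static string DecodeFrom64(string encoded)
    {
        return Encoding.UTF8.GetString(Convert.FromBase64String(encoded));
    }
}
```

One caveat with this approach: standard Base64 output can contain + and / characters, which may need extra handling when the value is used as a URL path segment.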

The Controller and View

Step 4: I am going barebones here for demo purposes, so apologies for the lack of HTML panache! We add a HomeController class that has two action methods – Index and GetImage. The Index method returns a list of file names in the Blob container. Note that these are just the names, not the complete URLs, because the direct URLs are useless: they are not available to the public.

Apart from stuffing the list of names in the ViewBag, we also generate the Shared Access Signature and put it in the ViewBag.

    public ActionResult Index()
    {
        IEnumerable<IListBlobItem> items = AzureBlobSA.GetContainerFiles("dnc-demo");
        List<string> urls = new List<string>();
        foreach (var item in items)
        {
            // Only block blobs are listed; keep the name, not the full URL.
            if (item is CloudBlockBlob)
            {
                var blob = (CloudBlockBlob)item;
                urls.Add(blob.Name);
            }
        }
        ViewBag.BlobFiles = urls;
        ViewBag.Sas = AzureBlobSA.GetSASUrl("dnc-demo");
        return View();
    }

The Index View

The View simply takes each file name from the ViewBag and creates an Action Link out of it. The Action Link passes the file name as the id parameter; note that the file name is first Base64 encoded. This is not a security mechanism. Rather, it converts the file name to a string that can be passed as a parameter to an Action method. If IIS detects a file name at the end of a URL, it hijacks the call and returns a 404 if that file is not found. So instead of passing the file name directly, we Base64 encode it.

The final query string parameter in the Action Link is the Shared Access Signature string that we obtained from the Controller. It is sent back as the sas parameter.

We also set the target to _blank so that each URL opens in a new window.

    @{
        ViewBag.Title = "Index";
    }
    <h2>Items in Blob Container</h2>
    <h3>The SAS Key: @ViewBag.Sas</h3>
    <ul>
        @foreach (string url in ViewBag.BlobFiles)
        {
            <li>
                @Html.ActionLink(url, "GetImage",
                    new
                    {
                        id = SharedClientAcessUrls.Lib.AzureBlobSA.EncodeTo64(url),
                        sas = ViewBag.Sas
                    },
                    new
                    {
                        target = "_blank"
                    })
            </li>
        }
    </ul>

If we run the application, the View is as follows

We have the SAS Key and the list of items in the Blob Container.

Now if we hover over any of the file names, we’ll see that instead of the URL to the blob container, the link points to a URL that submits data to the GetImage action method. The first parameter is the Base64 encoded file name and the second parameter is the URL-encoded sas key. Now let’s see what the GetImage action method does.

The GetImage Action method

The GetImage action method receives the Base64 encoded file name and the sas string. It decodes the Base64 string back to the file name and calls the GetSasBlobUrl method in our AzureBlobSA static class to get the URL with the permissions attached to it.

    public ActionResult GetImage(string id)
    {
        string fileName = AzureBlobSA.DecodeFrom64(id);
        return new RedirectResult(
            AzureBlobSA.GetSasBlobUrl("dnc-demo", fileName, Request.QueryString["sas"]));
    }

The URL is then passed to a RedirectResult, which takes the user to this new URL.

Now if the end user wants to distribute this URL, they technically can, but remember that after 2 minutes this link will have expired, and once it does, the end user will receive an output similar to the one below.

So by now we have achieved two out of the three goals we had set out to achieve, i.e.

1. We have not changed the permissions on the Blob Container

2. We have got a URL that gives access to the items in the Blob container but it expires after a preset period of time.

3. Our final goal is to control access to the URL altogether. This is easy to do: all we have to do is protect the HomeController with the [Authorize] attribute, which requires the user to present some kind of authentication token before getting to the list of files itself. If we want to be a little lenient, we could show them the list of files and put the [Authorize] attribute on the GetImage action method instead. Either way, using conventional access control methods along with Shared Access Signatures, we can control what kind of access we want to provide to our Azure Storage Blob items.

Conclusion

That wraps up this introduction to Shared Access Signatures for Azure Blob Storage items. We saw how we could protect our Azure Blob items from direct access. In future we’ll dig deeper to see how we can use this access to upload/download data from other client applications. Another topic worth investigating is how to revoke access that has already been granted. All that for another day; hope you enjoyed this article.

Download the entire source code of this article (Github)

This article has been editorially reviewed by Suprotim Agarwal.
