In a world where most developers and organizations have come to accept the constant churn of new tools, architectures and paradigms, containerized applications are slowly becoming a lingua franca for developing, deploying and operating applications. In no small part, this is thanks to the popularity and adoption of tools like Docker and Kubernetes.

It was back in 2014 when Google first announced Kubernetes, an open source project aiming to automate the deployment, scaling and management of containerized applications. With its version 1.0 released back in July 2015, Kubernetes has since experienced a sharp increase in adoption and interest shared by both organizations and developers.

Refreshingly, both organizations and developers seem to share the growing interest in Kubernetes. Surveys note how interest and adoption across the enterprise continue to rise. At the same time, Stack Overflow’s latest developer survey rates Kubernetes as the 3rd most loved and most wanted technology (with Docker rating even better!).

But none of this information will make it any easier for newcomers!

In addition to the learning curve for developing and packaging applications using containers, Kubernetes introduces its own specific concepts for deploying and operating applications.

The aim of this Kubernetes tutorial is to guide you through the basic and most useful Kubernetes concepts that you will need as a developer. For those who want to go beyond the basics, I will include pointers for various subjects like persistence or observability. I hope you find it useful and enjoy the article!

Kubernetes architecture

You are not alone if you are still asking yourself “What is Kubernetes?”

Let’s begin the article with a high-level overview of the purpose and architecture of Kubernetes.

If you check the official documentation, you will see the following definition:

Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services, that facilitates both declarative configuration and automation

In essence, this means Kubernetes is a container orchestration engine, a platform designed to host and run containers across a number of nodes.

To do so, Kubernetes abstracts the nodes where you want to host your containers as a pool of CPU/memory resources. When you want to host a container, you just declare to the Kubernetes API the details of the container (like its image and tag names and the necessary CPU/memory resources) and Kubernetes will transparently host it somewhere across the available nodes.

To make this possible, the architecture of Kubernetes is broken down into several components that track the desired state (i.e., the containers that users want to deploy) and apply the necessary changes to the nodes in order to achieve that desired state (i.e., adding/removing containers and other elements).

The nodes are typically divided into two main sets, each of them hosting different elements of the Kubernetes architecture depending on the role these nodes will play:

- The control plane. These nodes are the brain of the cluster. They contain the Kubernetes components that track the desired state and the current node state, and make container scheduling decisions across the worker nodes.

- The worker nodes. These nodes are focused on hosting the desired containers. They contain the Kubernetes components that ensure containers assigned to a given node are created/terminated as decided by the control plane.

The following picture shows a typical cluster, composed of several control plane nodes and several worker nodes.

Figure 1, High level architecture of a Kubernetes cluster with multiple nodes

As a developer, you declare which containers you want to host by using YAML files that follow the API. The simplest way to host the hello-world container from Docker would be declaring a Pod (more on this as soon as we are done with the introduction).

It looks something like this:

apiVersion: v1
kind: Pod
metadata:
  name: my-first-pod
spec:
  containers:
  - image: hello-world
    name: hello

The first step is describing the container you want to host in YAML, using Kubernetes objects like Pods.

Then you will need to interact with the cluster via its API and ask it to apply the objects you just described. While there are several clients you could use, I would recommend getting used to kubectl, the official CLI tool. We will see how to get started in a minute, but an option would be saving the YAML as hello.yaml and running the command

kubectl apply -f hello.yaml

This concludes a brief and simplified overview of Kubernetes. You can read more about the Kubernetes architecture and main components in the official docs.

Kubernetes – Getting started

The best way to learn about something is by trying for yourself.

Luckily with Kubernetes, there are several options you can choose to get your own small cluster! You don’t even need to install anything on your machine. If you want to, you could just use an online learning environment like Katacoda.

Set up your local environment

The main thing you will need is a way to create a Kubernetes cluster on your local machine. While Docker for Windows/Mac comes with built-in support for Kubernetes, I recommend using minikube to set up your local environment. The addons and extra features minikube provides simplify many of the scenarios you might want to test locally.

Minikube will create a very simple cluster with a single Virtual Machine where all the Kubernetes components will be deployed. This means you will need some container/virtualization solution installed on your machine, of which minikube supports a long list. Check the prerequisites and installation instructions for your OS in the official minikube docs.

In addition, you will also need kubectl installed on your local machine. You have various options:

- Install it locally on your machine, see the instructions in the official docs.

- Use the version provided by minikube. Note that if you choose this option, when you see a command like kubectl get pod you will need to replace it with minikube kubectl -- get pod.

- If you previously enabled Kubernetes support in Docker, you should already have a version of kubectl installed. Check with kubectl version.

Once you have both minikube and kubectl up and running on your machine, you are ready to start. Ask minikube to create your local cluster with:

$ minikube start
...
Done! kubectl is now configured to use "minikube" by default

Check that everything is configured correctly by ensuring your local kubectl can effectively communicate with the minikube cluster:

$ kubectl get node
NAME       STATUS   ROLES    AGE     VERSION
minikube   Ready    master   9m58s   v1.19.2

Next, let’s enable the Kubernetes dashboard addon.

This addon deploys the official dashboard to the minikube cluster. The dashboard provides a nice UX for interacting with the cluster and visualizing the currently hosted apps (even though we will continue to use kubectl through the article).

$ minikube addons enable dashboard
...
The 'dashboard' addon is enabled

Finally, open the dashboard by running the following command in a second terminal:

$ minikube dashboard

The browser will automatically launch with the dashboard:

Figure 2, Kubernetes dashboard running in your minikube environment

Use the online Katacoda environment

If you don’t want to or you can’t install the tools locally, you can choose a free online environment by using Katacoda.

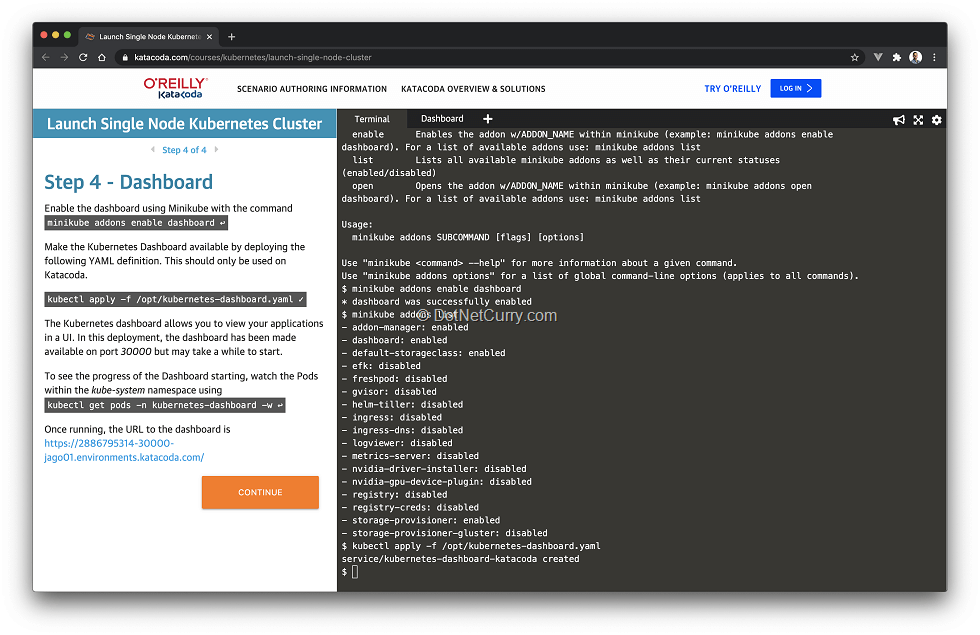

Complete the four steps of the following scenario in order to get your own minikube instance hosted by Katacoda: https://www.katacoda.com/courses/kubernetes/launch-single-node-cluster

At the time of writing, the scenario is free and does not require you to create an account.

By completing all the scenario steps, you will create a Kubernetes cluster using minikube, enable the dashboard addon and launch the dashboard in another tab of your browser. At the end of these four simple steps, you will have a shell where you can run kubectl commands, and the dashboard open in a separate tab.

Figure 3, setting up minikube online with Katacoda

I will try to note through the article when you will experience different behaviour and/or limitations when using the Katacoda online environment. This might be particularly the case when going through the networking and persistence sections.

Hosting containers: Pods, Namespaces and Deployments

Now that we have a working Kubernetes environment, we should put it to good use. Since one of the best ways to demystify Kubernetes is by using it, the rest of the article is going to be very hands on.

Pod is the unit of deployment in Kubernetes

In the initial architecture section, we saw how in order to deploy a container, you need to create a Pod. That is because the minimum unit of deployment in a Kubernetes cluster is a Pod, rather than a container.

- This indirection might seem arbitrary at first. Why not use a container as the unit of deployment rather than a Pod? The reason is that a Pod can include more than one container, where all containers in the same Pod share the same network space (they see each other as localhost) and the same volumes (so they can share data as well). Some advanced patterns, like init containers or sidecar containers, take advantage of having several containers in a Pod.

- That’s not to say you should add all the containers that make up your application inside a single Pod. Remember a Pod is the unit of deployment, so you want to be able to deploy and scale different Pods independently. As a rule of thumb, different application services or components should become independent Pods.

- In addition, while Pods are the first type of Kubernetes object we will see in the article, we will soon see others like Namespaces and Deployments.

If this seems confusing, you can assume for the rest of the article that Pod == 1 container, as we won’t use the more advanced features. As you get more comfortable with Kubernetes, the difference between Pod and container will become easier to understand.

In order to deploy a Pod, we will describe it using YAML. Create a file hello.yaml with the following contents:

apiVersion: v1
kind: Pod
metadata:
  name: my-first-pod
spec:
  containers:
  - image: hello-world
    name: hello

These files are called manifests. A manifest is just YAML that describes one or more Kubernetes objects. In the previous case, the manifest file we have created contains a single object:

- The kind: Pod indicates the type of object

- The metadata allows us to provide additional meta information about the object. In this case, we have just given the Pod a name. This is the minimum metadata piece required so Kubernetes can identify each Pod. We will see more in a minute when we take a look at the idea of Namespace.

- The spec is a complex property that describes what you want the cluster to do with this particular Pod. As you can see, it contains a list of containers where we have included the single container we want to host. The container image tells Kubernetes where to download the image from. In this case, it is just the name of a public Docker image found in Docker Hub, the hello-world container.

- Note you are not restricted to using public Docker Hub images. You can also use images from private registries as in registry.my-company.com/my-image:my-tag. (Setting up a private registry and configuring the cluster with credentials is outside the article scope. I have however provided some pointers at the end of the article).

Let’s ask the cluster to host this container. Simply run the kubectl command

$ kubectl apply -f hello.yaml

pod/my-first-pod created

In many terminals you can use a heredoc multiline string and directly pass the manifest without the need to create a file. For example, in macOS/Linux you can use:

$ cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: my-first-pod
spec:
  containers:
  - image: hello-world
    name: hello
EOF

Get comfortable writing YAML and applying it to the cluster. We will be doing that a lot through the article! If you want to keep editing and applying the same file, you just need to separate the different objects with a new line that contains: ---

Once created, we can then use kubectl to inspect the list of existing Pods in the cluster. Run the following command:

$ kubectl get pod
NAME           READY   STATUS             RESTARTS   AGE
my-first-pod   0/1     CrashLoopBackOff   4          2m45s

Note that it shows as not ready and has a status of CrashLoopBackOff. That is because Kubernetes expects Pod containers to keep running. However, the hello-world container we have used simply prints a message to the console and terminates. Kubernetes sees that as a problem with the Pod and tries to restart it.

There are specialized Kubernetes objects such as Jobs or CronJobs that can be used for cases where containers are not expected to run forever.
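
As a minimal sketch of that idea, the same hello-world container could be declared as a Job instead of a Pod. The name hello-job and the backoffLimit value are assumptions chosen for illustration; they do not come from the earlier manifests.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: hello-job            # hypothetical name
spec:
  backoffLimit: 2            # assumed value: retry a failing Pod at most twice
  template:
    spec:
      restartPolicy: Never   # a Job's Pod template must use Never or OnFailure
      containers:
      - name: hello
        image: hello-world
```

With a manifest like this, the Pod created by the Job should end up as Completed once the container exits successfully, rather than being restarted over and over.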

Let’s verify that it indeed runs as expected by inspecting the logs of the pod’s container (i.e., what the container wrote to the standard output). Given we have just run the hello-world container, we expect to see a greeting message written to the console:

$ kubectl logs my-first-pod
Hello from Docker!
This message shows that your installation appears to be working correctly.
... omitted ...
For more examples and ideas, visit:
https://docs.docker.com/get-started/

You can also see the Pod in the dashboard, as well as its container logs:

Figure 4, Inspecting the container logs in the dashboard

If you want to clean up and remove the Pod, you can use either of these commands:

kubectl delete -f hello.yaml

kubectl delete pod my-first-pod

Organizing Pods and other objects in Namespaces

Now that we know what a Pod is and how to create one, let’s introduce the Namespace object. The purpose of the Namespace is simply to organize and group the objects created in a cluster, like the Pod we created before.

If you imagine a real production cluster, there will be many Pods and other objects created. By grouping them in Namespaces, developers and administrators can define policies, permissions and other settings that affect all objects in a given Namespace, without necessarily having to list each individual object. For example, an administrator could create a production Namespace and assign different permissions and network policies to any object that belongs to that namespace.

If you list the existing namespaces with the following command, you will see the cluster already contains quite a few Namespaces. That’s due to the system components needed by Kubernetes and the addons enabled by minikube:

$ kubectl get namespace
NAME                   STATUS   AGE
default                Active   125m
kube-node-lease        Active   125m
kube-public            Active   125m
kube-system            Active   125m
kubernetes-dashboard   Active   114m

You can see the objects in any of these namespaces by adding the -n {namespace} parameter to a kubectl command. For example, you can see the dashboard pods with:

kubectl get pod -n kubernetes-dashboard

This means in Kubernetes, every object you create has at least two metadata values which are used for identification and management:

- The namespace. This is optional; when omitted, the default namespace is used.

- The name of the object. This is required and must be unique within the namespace.

The Pod we created earlier used the default namespace since no specific one was provided. Let’s create a couple of namespaces and a Pod inside each namespace:

apiVersion: v1
kind: Namespace
metadata:
  name: my-namespace1
---
apiVersion: v1
kind: Namespace
metadata:
  name: my-namespace2
---
apiVersion: v1
kind: Pod
metadata:
  name: my-first-pod
  namespace: my-namespace1
spec:
  containers:
  - image: hello-world
    name: hello
---
apiVersion: v1
kind: Pod
metadata:
  name: my-first-pod
  namespace: my-namespace2
spec:
  containers:
  - image: hello-world
    name: hello

There are a few interesting bits in the manifest above:

- You create namespaces by describing them in the manifest. A namespace is just an object with kind: Namespace.

- Namespaces have to be created before they can be referenced by Pods and other objects. (Otherwise you would get an error)

- Note how both pods have the same name. That is fine since they belong to different namespaces.

During the rest of the tutorial, we will keep things simple and use the default namespace.

Running multiple replicas with Deployments (example with ASP.NET Core Container)

Kubernetes would hardly achieve its stated goal of automating the deployment, scaling and management of containerized applications if you had to manually manage every single container.

While Pods are the foundation and the basic unit for deploying containers, you will rarely use them directly. Instead, you would use a higher level abstraction like the Deployment object. The purpose of the Deployment is to abstract the creation of multiple replicas of a given Pod.

With a Deployment, rather than directly creating a Pod, you give Kubernetes a template for creating Pods, and specify how many replicas you want. Let’s create one using an example ASP.NET Core container:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: aspnet-sample-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: aspnet-sample
  template:
    metadata:
      labels:
        app: aspnet-sample
    spec:
      containers:
      - image: mcr.microsoft.com/dotnet/core/samples:aspnetapp
        name: app

This might seem complicated, but it’s a template for creating Pods like I mentioned above. The Deployment spec contains:

- The desired number of replicas.

- A template which defines the Pod to be created. Its spec field is the same as the spec field of a Pod.

- A selector which gives Kubernetes a way of identifying the Pods created using the template. In this case, the template creates Pods using a specific label and the selector finds Pods that match that label.

After applying the manifest, run the following two commands. Note how the name of the deployment matches the one in the manifest, while the name of the Pod is pseudo-random:

$ kubectl get deployment
NAME                       READY   UP-TO-DATE   AVAILABLE   AGE
aspnet-sample-deployment   1/1     1            1           99s

$ kubectl get pod
NAME                                        READY   STATUS    RESTARTS   AGE
aspnet-sample-deployment-868f89659c-cw2np   1/1     Running   0          102s

This makes sense since the number of Pods is completely driven by the replicas field of the Deployment, which can be increased/decreased as needed.

Let’s verify that Kubernetes will indeed try to keep the number of replicas we defined. Delete the current Pod with the following command (use the Pod name generated in your system, taken from the output of the command above):

kubectl delete pod aspnet-sample-deployment-868f89659c-cw2np

Then notice how Kubernetes has immediately created a new Pod to replace the one we just removed:

$ kubectl get pod
NAME                                        READY   STATUS    RESTARTS   AGE
aspnet-sample-deployment-868f89659c-7qwkz   1/1     Running   0          45s

Change the number of replicas to two and apply the manifest again. Notice how if you get the pods, this time there are two created for the Deployment:

$ kubectl get pod
NAME                                        READY   STATUS    RESTARTS   AGE
aspnet-sample-deployment-868f89659c-7bfxz   1/1     Running   0          1s
aspnet-sample-deployment-868f89659c-7qwkz   1/1     Running   0          2m8s

Now set the replicas to 0 and apply the manifest. This time there will be no pods! (It might take a couple of seconds to clean up.)

$ kubectl get pod
No resources found in default namespace.

As you can see, Kubernetes is trying to make sure there are always as many Pods as the desired number of replicas.

While Deployment is one of the most common ways of scaling containers, it is not the only way to manage multiple replicas. StatefulSets are designed for running stateful applications like databases. DaemonSets are designed to simplify the use case of running one replica in each node. CronJobs are designed to run a container on a given schedule.

These cover more advanced use cases and although outside of the scope of the article, you should take a look at them before planning any serious Kubernetes usage. Also make sure to check the section Beyond the Basics at the end of the article and explore concepts like resource limits, probes or secrets.

Exposing applications to the network: Services and Ingresses

We have seen how to create Pods and Deployments that ultimately host your containers. Apart from running the containers, it’s likely you want to be able to talk to them. This might be either from outside the cluster or from other containers inside the cluster.

In the previous section, we deployed a sample ASP.NET Core application. Let’s begin by checking whether we can send an HTTP request to it. (Make sure to change the number of replicas back to 1.)

Using the following command, we can retrieve the internal IP of the Pod:

$ kubectl describe pod aspnet-sample-deployment
Name:         aspnet-sample-deployment-868f89659c-k7bvr
Namespace:    default
... omitted ...
IP:           172.17.0.6
... omitted ...

In this case, the Pod has an internal IP of 172.17.0.6. Other Pods can send traffic by using this IP. Let’s try this by adding a busybox container with curl installed, so we can send an HTTP request. The following command adds the busybox container and opens a shell inside the container:

$ kubectl run -it --rm --restart=Never busybox --image=yauritux/busybox-curl sh
If you dont see a command prompt, try pressing enter.
/ #

The command asks Kubernetes to run the command “sh”, enabling the interactive mode -it (so you can attach your terminal) in a new container named busybox using a specific image yauritux/busybox-curl that comes with curl preinstalled. This gives you a terminal running inside the cluster, which will have access to internal addresses like that of the Pod. This container is removed as soon as you exit the terminal due to the --rm --restart=Never parameters.

Once inside, you can now use curl as in curl http://172.17.0.6 and you will see the HTML document returned by the sample ASP.NET Core application.

/home # curl http://172.17.0.6
<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="utf-8" />
    <meta name="viewport" content="width=device-width, initial-scale=1.0" />
    <title>Home page - aspnetapp</title>
    <link rel="stylesheet" href="/lib/bootstrap/dist/css/bootstrap.min.css" />
    <link rel="stylesheet" href="/css/site.css" />
</head>
<body>
... omitted ...

Using Services to allow traffic between Pods

It is great that we can simply send requests using the IP of the Pod. However, depending on the Pod IP is not a great idea:

- What would happen if you were to use two replicas instead of one? Each replica would get its own IP, so which one would you use in your client application?

- What happens if a replica is terminated and recreated by Kubernetes? Its IP will likely change, so how would you keep your client application in sync?

To solve this problem, Kubernetes provides the Service object. This type of object provides a new abstraction that lets you:

- Provide a host name that you can use instead of the Pod IPs

- Load balance the requests across multiple Pods

Figure 5, simplified view of a default Kubernetes service

As you might suspect, a service is defined via its own manifest. Create a service for the deployment created before by applying the following YAML manifest:

apiVersion: v1
kind: Service
metadata:
  name: aspnet-sample-service
spec:
  selector:
    app: aspnet-sample
  ports:
  - port: 80
    targetPort: 80

The service manifest contains:

- a selector that matches the Pods to which traffic will be sent

- a mapping of ports, declaring which container port (targetPort) maps to the service port (port). Note the test ASP.NET Core application listens on port 80, see https://hub.docker.com/_/microsoft-dotnet-core-samples/

After you have created the service, you should see it when running the command kubectl get service.

Let’s now verify it is indeed allowing traffic to the aspnet-sample deployment. Run another busybox container with curl. Note how this time you can test the service with curl http://aspnet-sample-service (which matches the service name):

$ kubectl run -it --rm --restart=Never busybox --image=yauritux/busybox-curl sh
If you dont see a command prompt, try pressing enter.
/ # curl http://aspnet-sample-service
<!DOCTYPE html>
... omitted ...

If the service is located in a different namespace than the Pod sending the request, you can use serviceName.namespace as the host name. That would be http://aspnet-sample-service.default in the previous example.

Having a way for Pods to talk to other Pods is pretty handy. But I am sure you will eventually need to expose certain applications/containers to the world outside the cluster!

That’s why Kubernetes lets you define other types of services (the default one we used in this section is technically a ClusterIP service). In addition to services, you can also use an Ingress to expose applications outside of the cluster.

We will see one of these services (the NodePort) and the Ingress in the next sections.

Using NodePort services to allow external traffic

The simplest way to expose Pods to traffic coming from outside the cluster is by using a Service of type NodePort. This is a special type of Service object that maps a random port (by default in the range 30000-32767) in every one of the cluster nodes (hence the name NodePort). That means you can then use the IP of any of the nodes and the assigned port in order to access your Pods.

Figure 6, NodePort service in a single node cluster like minikube

It is a very common use case to define these in an ad-hoc way, particularly during development. For this reason, let’s see how to create it using a kubectl shortcut rather than the YAML manifest. Given the deployment aspnet-sample-deployment we created earlier, you can create a NodePort service using the command:

kubectl expose deployment aspnet-sample-deployment --port=80 --type=NodePort

Once created, we need to find out which node port the service was assigned:

$ kubectl get service aspnet-sample-deployment
NAME                       TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
aspnet-sample-deployment   NodePort   10.110.202.168   <none>        80:30738/TCP   91s

You can see it was assigned port 30738 (this might vary on your machine).
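
For reference, a NodePort service can also be declared in a manifest, like the regular service we created earlier. The following is a rough sketch of something equivalent to the kubectl expose command above. The service name and the commented-out nodePort value are assumptions for illustration.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: aspnet-sample-nodeport   # hypothetical name
spec:
  type: NodePort                 # without this field the service would be a ClusterIP one
  selector:
    app: aspnet-sample           # same label used by the Deployment's Pod template
  ports:
  - port: 80                     # port of the service inside the cluster
    targetPort: 80               # port the container listens on
    # nodePort: 30080            # optional, assumed value; if omitted, a port in the 30000-32767 range is assigned
```

Pinning the nodePort makes the port predictable, at the cost of having to make sure no other service in the cluster already uses it.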

Now in order to open the service in the browser, you would need to find the IP of any of the cluster nodes, for example using kubectl describe node. Then you would navigate to port 30738 in any of those node IPs.

However, when using minikube, we need to ask minikube to create a tunnel between the VM of our local cluster and our local machine. This is as simple as running the following command:

minikube service aspnet-sample-deployment

And as you can see, the sample ASP.NET Core application is up and running as expected!

Figure 7, the ASP.NET Core application exposed as a NodePort service

If you are running in Katacoda, you won’t be able to open the service in the browser using the minikube service command. However, once you get the port assigned to the NodePort service, you can open that port by clicking on the + icon at the top of the tabs, then click “Select port to view on Host 1”.

Figure 8, opening the NodePort service when using Katacoda

Using an Ingress to allow external traffic

NodePort services are great for development and debugging, but not something you really want to depend on for your deployed applications. Every time the service is created, a random port is assigned, which could quickly become a nightmare to keep in sync with your configuration.

That is why Kubernetes provides another abstraction, designed primarily for exposing HTTP/S services outside the cluster: the Ingress object. The Ingress provides a mapping between a specific host name and a regular Kubernetes service. For example:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: webtool
spec:
  rules:
  - host: aspnet-sample-deployment.io
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: aspnet-sample-service
            port:
              number: 80

Note that for versions prior to Kubernetes 1.19 (you can check the server version returned by kubectl version), the Ingress object was served by an older beta API (networking.k8s.io/v1beta1) with a different schema. You would create the same Ingress as:

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: webtool
spec:
  rules:
  - host: aspnet-sample-deployment.io
    http:
      paths:
      - backend:
          serviceName: aspnet-sample-service
          servicePort: 80

We are essentially mapping the host aspnet-sample-deployment.io to the very same regular service we created earlier in the article, when we first introduced the Service object.

An Ingress works via an Ingress controller deployed on the nodes of the cluster. This controller listens on ports 80/443 and routes requests to internal services based on the mappings from all the defined Ingress objects.

Figure 9, service exposed through an Ingress in a single node cluster

Now all you need is to create a DNS entry that maps the host name to the IP of the node. In clusters with multiple nodes, this is typically combined with some form of load balancer in front of all the cluster nodes, so you create DNS entries that point to the load balancer.

Let’s try this locally. Begin by enabling the ingress addon in minikube as in:

minikube addons enable ingress

Note that on macOS you might need to recreate your minikube environment using minikube start --vm=true

Then apply the Ingress manifest defined above. Once the Ingress is created, we are ready to send a request to the cluster node on port 80! All we need is to create a DNS entry, for which we will simply update our hosts file.

Get the IP of the machine hosting your local minikube environment:

$ minikube ip
192.168.64.13

Then update your hosts file to manually map the host name aspnet-sample-deployment.io to the minikube IP returned in the previous command (The hosts file is located at /etc/hosts in Mac/Linux and C:\Windows\System32\Drivers\etc\hosts in Windows). Add the following line to the hosts file:

192.168.64.13 aspnet-sample-deployment.io

After editing the file, open http://aspnet-sample-deployment.io in your browser and you will reach the sample ASP.NET Core application through your Ingress.

Figure 10, the ASP.NET Core application exposed with an Ingress

Kubernetes – Beyond the basics

The objects we have seen so far are the core of Kubernetes from a developer point of view. Once you feel comfortable with these objects, the next steps will feel much more natural.

I would like to use this section as a brief summary with directions for more advanced content that you might want (or need) to explore next.

More on workloads

You can inject configuration settings as environment variables into your Pod’s containers. See the official docs. Additionally, you can read the settings from ConfigMap and Secret objects, which you can directly inject as environment variables or even mount as files inside the container.
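
As a small sketch of both approaches, the manifest below sets one environment variable directly and reads another one from a ConfigMap. The object names and the GREETING setting are hypothetical, chosen only for illustration; the image is the sample ASP.NET Core container used earlier.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: aspnet-sample-config           # hypothetical name
data:
  GREETING: "Hello from a ConfigMap"   # hypothetical setting
---
apiVersion: v1
kind: Pod
metadata:
  name: config-demo                    # hypothetical name
spec:
  containers:
  - name: app
    image: mcr.microsoft.com/dotnet/core/samples:aspnetapp
    env:
    - name: ASPNETCORE_ENVIRONMENT     # plain value set directly in the manifest
      value: Development
    - name: GREETING                   # value read from the ConfigMap above
      valueFrom:
        configMapKeyRef:
          name: aspnet-sample-config
          key: GREETING
```

Reading a value from a Secret follows the same shape, using secretKeyRef instead of configMapKeyRef.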

We briefly mentioned at the beginning of the article that Pods can contain more than one container. You can explore patterns like init containers and sidecars to understand how and when you can take advantage of this.
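
To give a feel for the init container pattern, here is a minimal sketch in which an init container writes a file into a shared volume before the main container starts. The busybox and nginx images, the names and the paths are all assumptions picked for the example.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: init-demo                      # hypothetical name
spec:
  volumes:
  - name: workdir
    emptyDir: {}                       # scratch volume shared by both containers
  initContainers:
  - name: prepare                      # runs to completion before the main container starts
    image: busybox
    command: ["sh", "-c", "echo 'Hello from the init container' > /work/index.html"]
    volumeMounts:
    - name: workdir
      mountPath: /work
  containers:
  - name: web
    image: nginx
    volumeMounts:
    - name: workdir
      mountPath: /usr/share/nginx/html # nginx serves the file written by the init container
```

A sidecar follows the same idea, except that the extra container is listed under containers and keeps running next to the main one.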

You can also make both your cluster and Pods more robust by taking advantage of:

- Container probes, which provide information to the control plane on whether the container is still alive or whether it is ready to receive traffic.

- Resource limits, which declare how much memory, CPU and other finite resources your container needs. This lets the control plane make better scheduling decisions and balance the different containers across the cluster nodes.
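
Both ideas could be sketched on the sample ASP.NET Core container as follows. The probe paths, timings and resource figures are illustrative assumptions rather than recommended values, and the Pod name is hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: robust-demo                    # hypothetical name
spec:
  containers:
  - name: app
    image: mcr.microsoft.com/dotnet/core/samples:aspnetapp
    ports:
    - containerPort: 80
    livenessProbe:                     # the container is restarted if this check keeps failing
      httpGet:
        path: /                        # assumed path; a dedicated health endpoint is more common
        port: 80
      initialDelaySeconds: 10
      periodSeconds: 10
    readinessProbe:                    # the Pod only receives Service traffic while this check passes
      httpGet:
        path: /
        port: 80
      periodSeconds: 5
    resources:
      requests:                        # what the scheduler reserves for the container
        cpu: 100m
        memory: 128Mi
      limits:                          # upper bound the container is allowed to use
        cpu: 500m
        memory: 256Mi
```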

Persisting data

Any data stored in a container is ephemeral. As soon as the container is terminated, that data will be gone. Therefore, if you need to permanently keep some data, you need to persist it somewhere outside the container. This is why Kubernetes provides the PersistentVolume and PersistentVolumeClaim abstractions.

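While the volumes can be backed by folders in the cluster nodes (using the hostPath or local volume types), these are typically backed by cloud storage such as AWS EBS or Azure Disks. Note that the emptyDir volume type is not an option for permanent data, since its contents are deleted together with the Pod.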
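A minimal sketch of how a Pod asks for persistent storage could look like the following. The claim name, Pod name, size and mount path are arbitrary, and the example assumes your cluster has a default StorageClass that provisions the volume for you (minikube ships with one).

```yaml
# Hypothetical names and sizes; assumes a default StorageClass exists.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: data-claim
spec:
  accessModes:
  - ReadWriteOnce            # mounted read-write by a single node
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: pvc-demo
spec:
  containers:
  - name: app
    image: busybox:1.36
    # Appends a timestamp on every start, so the file survives Pod restarts
    command: ["sh", "-c", "date >> /data/started.txt; sleep 3600"]
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: data-claim
```

If you delete and recreate the Pod, /data/started.txt should keep growing, since the data lives in the volume bound to the claim rather than in the container.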

Also remember that we mentioned StatefulSets as the recommended workload (rather than Deployments) for stateful applications such as databases.

CI/CD

In the article, we have only used container images that were publicly available in Docker Hub. However, it is likely that you will be building and deploying your own application images, which you might not want to upload to a public registry like Docker Hub. In those situations:

- You will typically configure a private container registry. You could use a cloud service like AWS ECR or Azure ACR, or host your own instance of a service like Nexus or Artifactory.

- You then configure your cluster with the necessary credentials so it can pull the images from your private registry.
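As a sketch of the second step, credentials are usually stored in a Secret of type docker-registry and referenced from the Pod through imagePullSecrets. The registry host, image name and secret name (registry.example.com, my-team/my-app, regcred) are placeholders, not real services.

```yaml
# The Secret is assumed to have been created beforehand, for example with:
#   kubectl create secret docker-registry regcred \
#     --docker-server=registry.example.com \
#     --docker-username=<user> \
#     --docker-password=<password>
apiVersion: v1
kind: Pod
metadata:
  name: private-image-demo
spec:
  # Credentials used when pulling this Pod's images
  imagePullSecrets:
  - name: regcred
  containers:
  - name: app
    image: registry.example.com/my-team/my-app:1.0.0   # placeholder image
```

Managed clusters often offer alternatives that avoid handling the Secret yourself, so check what your cloud provider supports.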

Another important aspect will be describing your application as a set of YAML files with the various objects. I would suggest getting familiar with Helm and considering Helm charts for that purpose.

Finally, this might be a good time to think about CI/CD.

- Keep using your preferred tool for CI, where you build and push images to your container registry. Then use a GitOps tool like Argo CD for deploying apps to your cluster.

- Clouds like Azure offer CI/CD solutions, like Azure DevOps, which integrate out of the box with clusters hosted in the same cloud. Note that Azure DevOps was covered in a previous edition of the magazine.
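To make the GitOps option from the first bullet a bit more concrete, this is roughly what an Argo CD Application looks like. It assumes Argo CD is already installed in the argocd namespace; the repository URL, path, application name and target namespace are all placeholders.

```yaml
# Hypothetical Argo CD Application; repo URL, path and names are placeholders.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: my-app
  namespace: argocd                  # namespace where Argo CD is assumed to run
spec:
  project: default
  source:
    repoURL: https://git.example.com/my-team/my-app-manifests.git
    targetRevision: main
    path: k8s                        # folder containing the YAML manifests
  destination:
    server: https://kubernetes.default.svc   # the cluster Argo CD runs in
    namespace: my-app
  syncPolicy:
    automated:
      prune: true                    # delete objects removed from Git
      selfHeal: true                 # revert manual changes made in the cluster
```

With a setup like this, your CI pipeline only needs to push images and update the manifests in Git, and Argo CD takes care of applying them to the cluster.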

Cloud providers

Many different public clouds provide Kubernetes services. You can get Kubernetes as a service in Azure, AWS, Google Cloud, DigitalOcean and more.

In addition, Kubernetes has drivers which implement features such as persistent volumes or load balancers using specific cloud services.

Interesting tools you might want to consider

External-dns and cert-manager are great ways to automatically generate both DNS entries and SSL certificates directly from your application manifest.

Velero is an awesome tool for backing up and restoring your cluster, including data in persistent volumes.

The Prometheus and Grafana operators provide the basis for monitoring your cluster. With the fluentd operator you can collect all logs and send them to a persistent destination.

Network policies, RBAC and resource quotas are the first stops when sharing a cluster between multiple apps and/or teams.
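As a small taste of two of these, the sketch below caps the resources of a namespace and blocks all incoming traffic to its Pods unless another policy allows it. The namespace and object names (team-a, team-a-quota, default-deny-ingress) and the quota numbers are invented for the example. Keep in mind that network policies are only enforced when the cluster's network plugin supports them.

```yaml
# Hypothetical namespace, names and numbers, for illustration only.
apiVersion: v1
kind: ResourceQuota
metadata:
  name: team-a-quota
  namespace: team-a
spec:
  hard:
    requests.cpu: "4"        # sum of CPU requests across the namespace
    requests.memory: 8Gi
    limits.cpu: "8"          # sum of CPU limits across the namespace
    limits.memory: 16Gi
    pods: "20"               # maximum number of Pods
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: team-a
spec:
  podSelector: {}            # empty selector: applies to every Pod in the namespace
  policyTypes:
  - Ingress                  # no ingress rules listed, so all inbound traffic is denied
```

From there you would add more specific policies that allow only the traffic each application needs.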

If you want to secure your cluster, trivy and anchore can scan your containers for vulnerabilities, falco can provide runtime security and kube-bench runs an audit of the cluster configuration.

...and many, many more than I can remember or list here.

Conclusion

Kubernetes can be quite intimidating to get started with. There are plenty of new concepts and tools to get used to, which can make running a single container for the first time a daunting task.

However, it is possible to focus on the most basic functionality and elements that let you, a developer, deploy an application and interact with it.

I have spent the last year helping my company transition to Kubernetes, with dozens of developers having to ramp up on Kubernetes in order to achieve their goals. In my experience, getting these fundamentals right is key. Without them, I have seen individuals and teams getting blocked or going down the wrong path, both ending in frustration and wasted time.

Regardless of whether you are simply curious about Kubernetes or embracing it at work, I hope this article helped you grasp these basic concepts and sparked your interest. In the end, the contents here are but a tiny fraction of everything you could learn about Kubernetes!

This article was technically reviewed by Subodh Sohoni.

This article has been editorially reviewed by Suprotim Agarwal.

Daniel Jimenez Garcia is a passionate software developer with 10+ years of experience who likes to share his knowledge and has been publishing articles since 2016. He started his career as a Microsoft developer focused mainly on .NET, C# and SQL Server. In the latter half of his career he worked on a broader set of technologies and platforms with a special interest for .NET Core, Node.js, Vue, Python, Docker and Kubernetes. You can check out his repos.