Almost a year and half ago, Clemens Vasters (Architect at Microsoft) tweeted saying both Updates and Deletes (in databases) result in Destruction of data. This struck me as a very interesting take on maintenance of Historical data. It turns out, it’s not an Architectural fantasy but a hard bound requirement in the Finance world where ‘destruction’ of any type of data is deemed a criminal offense.

Ever since that Tweet from Clemens, I have been meaning to explore this pattern. Finally, when suggestions for an Architecture focused article in the magazine popped up, I decided to do a deep dive in what I refer to as - a Lossless Information System design.

A Lossless Information System (LLIS) is one that ensures that every data operation in it, is tracked and no information is ever deleted (archived, but not deleted). Projects in Finance domain are the biggest targets for Lossless Information Systems because of stringent Audit trail requirements. This type of data is often referred to as immutable data. Today we will see how we can build such a system using a traditional RDBMS solution.

The DotNetCurry .NET Magazine – A Free High Quality Digital Magazine for .NET professionals is published once every two months. Subscribe to this eMagazine for Free and get access to hundreds of free tutorials from experts

A Little History of LLIS

LLIS is not a new concept and has been academically researched extensively in the 1980s and 90s. One of the notable works on LLIS is an IEEE paper by Bhargava, G and Gadia S.K. Their paper was published in IEEE journal – IEEE Transactions on Knowledge and Data Engineering in Feb 1993 and this article leans heavily on the paper for theoretical relevance.

For simplicity, we will use the scenario where we need to save User Account information in a way that every change is tracked, leaving us an easy to trace history trail. Our sample schema will save UserAccount + UserDepartment association information.

As we see above, the two tables store information about a user and their department. [UserDepartment.UserID] is the foreign key (FK) to the UserAccount table.

Traditional Usage and limitations

Traditional Usage of the above schema is to simply timestamp on creation and modify the UpdatedBy timestamp at the time of updates. It provides a creation history and the last update history. It depends on backend archival and backup systems to retrieve old data. This suffices where audit requirements do not mandate exhaustive history. However, Finance domain audit requirements often mandate that data once entered in a system, should never be destroyed. This implies every bit of data be traceable. This gives us the initial impression that data cannot be deleted or in other words the DELETE operation is restricted. However, if we look deeper we will realize that even updating a value from False to True is in fact ‘destruction’ of data. The value of False is now ‘Replaced’ and hence no longer retrievable and thus considered ‘destroyed’. This extends our restrictions to DELETE and UPDATE operations.

In a world of transactional RDBMS when CREATE, READ, UPDATE and DELETE is the norm, taking out UPDATE and DELETE kind of throws a major spanner in the works, at least on the outset. Let’s see what we can do to try and conform to this ‘requirement’.

Modifying Schema for History Tracking

As we see above, we have split each table into an Id table and an Operations table. The ID table has what is referred to as non-volatile data (because a User.Id value is immutable throughout the lifetime of the entity). The volatile data, data that is updated in the lifetime of the entity, is moved to the Operations table. Also notice the Action column that has been added to the volatile data table. This can have three values C(reate), U(pdate) and D(elete), depending on the action. Id is the auto-increment PK.

Database Operations

Given the above changes, let’s see how we can do the CRUD operations or their equivalents.

CREATE Operation

This involves Inserting a row of data in the Id table. This gives us the Primary Key to be used in the Operations table. Once we have the Primary Key, we insert the volatile data in the Operations table with the action flag value of ‘C’ (for create).

UPDATE Operation

To update the data entered above, we do another INSERT operation. However we need to touch (the volatile) data that’s in the operations table only. So an UPDATE operation actually does an INSERT with the Action flag set to ‘U’. All fields that have been updated in that operation are INSERTed in the operations table.

DELETE Operation

To delete data, we essentially mark an entry as deleted by inserting a row in the operations table with the Action flag ‘D’.

READ Operation

Now that we have seen all the update operations, we have one challenge to overcome. That is SELECT. Since we have multiple possible rows of volatile data (in the operations table) for each non-volatile data item, the SELECT operation needs to be able to select the Active or the most recent ‘row’ of data. Max value of the Id column in the operations table, for a given Foreign Key (UserAccountId or UserDepartmentId) will always have the latest value. So in our filter, we check for the Max value of the Id of the Operations table for each row in the Id table. Next we check if the latest field’s Action is ‘D’ and exclude all rows marked as such. This returns us the last created/updated data that is not deleted.

For easy access to this set of data, we create a View on top of the Id and Operations tables with the above filtering constraints.

Implementing the LLIS

Verifying data at SQL Server

Before we get into MVC and EntityFramework, let’s validate our design by entering some data in our tables. Let’s say we want to Create a new User ‘Sumit’ at location ‘Pune’, we would fire the following two queries

INSERT INTO UserAccounts (CreatedById, CreatedDate)

Values(1, GETDATE());

INSERT INTO UserAccountOperations

([UserAccountId]

,[UserName]

,[Address]

,[Action]

,[UpdatedById]

,[UpdatedDate])

VALUES

(@@IDENTITY

,'Sumit'

,'Pune'

,'C'

,1

,GETDATE())

The UserAcccountOperations table is using the Identity key from the UserAccounts table and updating itself with the required data and status flag as ‘C’

Once this data is entered, execute the following query

SELECT

UserAccounts.Id, UserAccounts.

CreatedById, UserAccounts.

CreatedDate, UserAccountOperations.

Id

AS

OperationsId, UserAccountOperations.UserName, UserAccountOperations.Address, UserAccountOperations.Action, UserAccountOperations.UpdatedById,

UserAccountOperations.UpdatedDate

FROM

UserAccountOperations INNER JOIN UserAccounts ON

UserAccountOperations.UserAccountId = UserAccounts.Id

WHERE

(UserAccountOperations.Action <> 'D') AND

(UserAccountOperations.Id =

(SELECT MAX(Id) AS MaxId

FROM UserAccountOperations AS UAO

WHERE (UserAccountId = UserAccountOperations.UserAccountId)

)

)

This query returns the following data

Now we have two rows in the Operations table for the same user, but if we use the query for our View, we will get only the last UPDATED row.

To delete this row of data we again do an INSERT operation into UserAccountOperations table with the ActionFlag set to ‘D’.

INSERT INTO UserAccountOperations

([UserAccountId]

,[UserName]

,[Address]

,[Action]

,[UpdatedById]

,[UpdatedDate])

VALUES

(@@IDENTITY

,'Sumit'

,'Mumbai'

,'D'

,1

,GETDATE())

Now when we use the ‘View’ query, we end up with no rows of data even though we actually have three historic rows of data. The following query shows us the History

SELECT * FROM UserAccountOperations WHERE UserAccountId = 1

Creating an ASP.NET Application for a Proof of Concept

We create an ASP.NET WebAPI Solution and add three Class library projects to it.

Along with the View project, we have the CompositionRoot for mapping dependencies, Data for interacting with Database using EntityFramework, Domain layer for defining Business Entities and constraints. In the View layer we make use of Ninject as our Dependency Injection framework.

Generating the Data Layer using EF Database

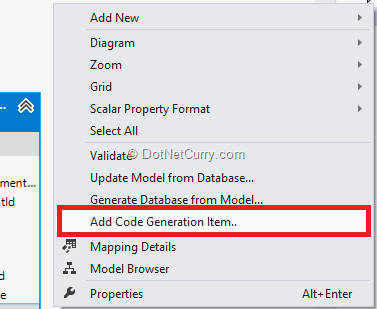

Since we designed the DB Schema first, we’ll use the Entity Framework EDMX Designer to reverse engineer our data layer. Once we have the DB Diagram we right click on it and add the Code Generation T4 file EF5.x Db Context Generator. This T4 template ensures the entities generated via the EMDX are EF Code First compliant.

Setting up Repository

In the Domain class, we’ll setup the Repository Interface ISqlServerRepository

public interface IUserDataRepository

{

User CreateUser(User newUser);

User UpdateUser(User existingUser);

User SelectUser(int userId);

IEnumerable SelectAllUsers();

IEnumerable SelectAllUsersHistory();

IEnumerable SelectUserHistory(int userId);

bool DeleteUser(int userId);

UserDepartment CreateUserDepartment(UserDepartment newUser);

UserDepartment UpdateUserDepartment(UserDepartment existingUser);

UserDepartment SelectUserDepartment(int userId);

IEnumerable SelectAllUserDepartments();

bool DeleteUserDepartment(int userId);

}

Here User and UserDepartment are domain objects that don’t map to the database schema, instead they encapsulate data that the User and UserDeparment entities should contain from a business perspective.

In the Llis.Data library that contains the EF Code first data objects, we implement the IUserDataRepository in SqlServerRepository class. This is where we deviate from traditional implementations. Instead of using the DBContext to call the respective operations, we change the implementation such that the Updates and Deletes are replaced by Insert statements and the Read/Select operation uses the View Poco to retrieve required data.

Setting up Ninject

To effectively use the Repository pattern, we will go with a DI framework. Ninject is pretty easy to use and get started with. If you are new to it, read Dependency Injection Using Ninject In ASP.NET MVC. In the Llis.View project add the Ninject package via Nuget. The Console command is

Install-package Ninject

Next we add the MVC extensions, these are a part of the Ninject.MVC3 package. Don’t be alarmed they work with MVC4 just fine.

Install-package Ninject.MVC3

Now that these two dependencies are in place, open the App_Start\NinjectWebCommon.cs file that’s added by the Ninject.MVC3 package. This class is responsible for hooking Ninject on to MVC as the DI Framework. Here update the empty RegisterServices method as follows

private static void RegisterServices(IKernel kernel)

{

kernel.Load(Assembly.GetExecutingAssembly(),

Assembly.Load("Llis.Domain"),

Assembly.Load("Llis.Data"),

Assembly.Load("Llis.CompositionRoot"));

}

We are providing Ninject will all possible dependencies in that might have the Concrete implementations of interfaces that we may need. Next in the Llis.CompositionRoot project we add the NInject package first and then add a class called DependencyMapper as follows:

public class DependencyMapper : NinjectModule

{

public override void Load()

{

this.Bind().To();

}

}

The CompositionRoot library is traditionally where an IoC container composes objects. In our case, the Interface and Implementation are in different libraries (justifiably so) as we don’t want the View layer to carry any direct dependency of the Data layer. We use the CompositionRoot to bridge this problem.

Please note, all Interfaces whose concrete instances are required, need to be registered here.

Setting up an Index and History View

Now that we have setup the backend, let’s create a couple of Views, one for the Index and one for the History of User data. The data is returned to us via the Implementations in the repository. Their implementations are as follows:

IEnumerable SelectAllUsers();

IEnumerable SelectAllUsersHistory();

public IEnumerable SelectAllUsersHistory()

{

List entities = new List();

using (llisdb _context = new llisdb())

{

foreach (var item in _context.UserAccounts.Include("UserAccountOperations"))

{

foreach (var operItem in item.UserAccountOperations)

{

entities.Add(new Domain.Entities.User

{

Id = item.Id,

Address = operItem.Address,

OperationId = operItem.Id,

UserName = operItem.UserName,

Action = operItem.Action,

CreateDate = item.CreatedDate,

UpdatedDate = operItem.UpdatedDate

});

}

}

}

return entities.OrderBy(k => k.OperationId);

}

public IEnumerable SelectAllUsers()

{

List entities = new List();

using (llisdb _context = new llisdb())

{

var items = _context.UserAccountsViews.ToList();

foreach (var operItem in items)

{

entities.Add(new Domain.Entities.User

{

Id = operItem.Id,

Address = operItem.Address,

OperationId = operItem.OperationsId,

UserName = operItem.UserName,

Action = operItem.Action,

CreateDate = operItem.CreatedDate,

UpdatedDate = operItem.UpdatedDate

});

}

}

return entities;

}

As we can see, SelectAllUsersHistory actually fetches data using the User (and UserOperations) tables. This returns us the complete history of the data. SelectAllUsers on the other hand, uses the UserAccountView that returns only the latest data.

From the given script (UserData.sql) if we run the INSERT queries only, we get data as follows:

As we can see in the image, there are 4 Users and they have four corresponding entries in the history table. All their actions are ‘C’ (for Create). Next if we run the Update portion of the included script and refresh the above two page we’ll see data as below

As highlighted in the Users List, the User ‘Sumit’ seems to have moved to ‘Pune’ and the User ‘Akhi’ has moved to ‘Milpitas’.

However if we check the history table User ‘Sumit’ in fact made a pit stop at ‘Milpitas’ before heading out to ‘Pune’. We can also see the time at which the changes were made and in the database we have the UserId to show who made the change.

As a result we now have an easy to maintain Audit trail of our User Data.

Conclusion

Trying to maintain a lossless system has many approaches like copying data over to archive before updating or using built in database transaction logs and so on. When business requirements impose strict restrictions on what DML operations can be allowed, the above explained design can be used to build a simple Loss Less Information System.

There are two major considerations, first being volume of transactions and second being performance. There will be a trade-off, however by baking in reasonable performance expectations with stringent archival policy can ensure performance never goes below given SLA and system remains responsive for the longest period.

Source code for this sample can be downloaded from Github

This article has been editorially reviewed by Suprotim Agarwal.

C# and .NET have been around for a very long time, but their constant growth means there’s always more to learn.

We at DotNetCurry are very excited to announce The Absolutely Awesome Book on C# and .NET. This is a 500 pages concise technical eBook available in PDF, ePub (iPad), and Mobi (Kindle).

Organized around concepts, this Book aims to provide a concise, yet solid foundation in C# and .NET, covering C# 6.0, C# 7.0 and .NET Core, with chapters on the latest .NET Core 3.0, .NET Standard and C# 8.0 (final release) too. Use these concepts to deepen your existing knowledge of C# and .NET, to have a solid grasp of the latest in C# and .NET OR to crack your next .NET Interview.

Click here to Explore the Table of Contents or Download Sample Chapters!

Was this article worth reading? Share it with fellow developers too. Thanks!

Sumit is a .NET consultant and has been working on Microsoft Technologies since his college days. He edits, he codes and he manages content when at work. C# is his first love, but he is often seen flirting with Java and Objective C. You can follow him on twitter at @

sumitkm or email him at sumitkm [at] gmail