Azure Cosmos DB is Microsoft's fully managed globally distributed, multi-model database service "for managing data at planet-scale".

But what do those terms mean?

Does "planet-scale" mean you can scale indefinitely?

Is it really a multi-model Database?

In this tutorial, I'd like to explore the major components and features that define Azure Cosmos DB, making it a truly unique database service.

Purpose of this Azure Cosmos DB article

This article is meant for developers who want to get a grasp of the concepts, features and advantages they get when using Azure Cosmos DB in distributed environments.

I'm not going to touch on specific detailed aspects, introductions and code samples. These can already be found in abundance across the web, including in different tutorials on DotNetCurry.

On the other hand, at times, you need extended knowledge and some practical experience to be able to follow the official Azure Cosmos DB documentation. Hence, my aim is to complement the theoretical documentation with a more practical and pragmatic approach, offering a detailed guide into the realm of Azure Cosmos DB while keeping the learning curve low.

What is NoSQL?

To start off, we need a high-level overview of the purpose and features of Azure Cosmos DB. And to do that, we need to start at the beginning.

Azure Cosmos DB is a "NoSQL" database.

But what is "NoSQL", and why and how do "NoSQL" databases differ from traditional "SQL" databases?

As defined on Wikipedia, SQL, or Structured Query Language, is a "domain-specific" language. It is designed for managing data held in relational database management systems (RDBMS). As the name suggests, it is particularly useful in handling structured data, where there are relations between different entities of that data.

So, SQL is a language, not tied to any specific framework or database. It was standardized in 1987 (much like ECMAScript became the standard for JavaScript), but despite this standardization, most SQL code is not completely portable between different database systems. In other words, there are lots of variants, depending on the underlying RDBMS.

SQL has become an industry standard. Different flavours include T-SQL (Microsoft SQL Server), PL/SQL (Oracle) and PL/pgSQL (PostgreSQL); and the standard is supported by all sorts of database systems including MySQL, MS SQL Server, MS Access, Oracle, Sybase, Informix, Postgres and many more.

Even Azure Cosmos DB supports a SQL-like syntax to query NoSQL data, but more on that later.

So that's SQL, but what do we mean when we say “NoSQL”?

Again, according to Wikipedia, a NoSQL (originally referring to “non-SQL” or “non-relational”) database provides a mechanism for storage and retrieval of data that is modelled differently from the tabular relations used in relational databases. It is designed specifically for (but is certainly not limited to) handling Big Data.

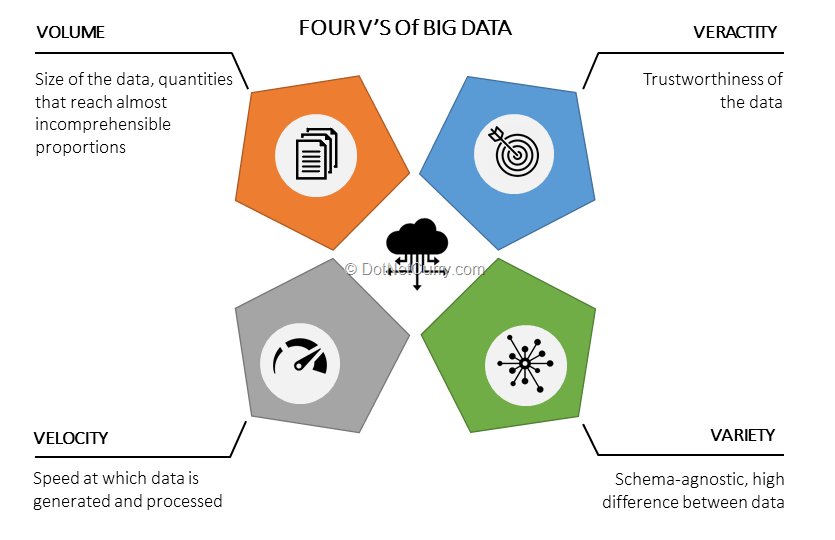

Big Data is often defined by four V's: Volume (a high count of records, with quantities of data that reach almost incomprehensible proportions), Variety (large differences between data items), Velocity (how fast data is coming in and how fast it is processed) and Veracity (the biases, noise and abnormality in data).

Figure 1: Big Data, Four V’s

Because of their history, strict schema rules and lack of scaling options (or let's call it scaling challenges), relational databases are simply not optimized to handle such enormous amounts of data.

Relational databases were mostly designed to scale up, by increasing the CPU power and RAM of the hosting server. But, for handling Big Data, this model just doesn’t suffice.

For one, there are limits to how much you can scale up a server. Scaling up also implies higher costs: the bigger the host machine, the higher the end bill will be. And, after you hit the limits of up-scaling, the only real solution (there are workarounds, but no real solutions) is to scale out, meaning the deployment of the database on multiple servers.

Don't get me wrong, relational databases like MS SQL Server and Oracle are battle tested systems, perfectly capable in solving a lot of problems in the current technical landscape. The rise of NoSQL databases does not, in any way, take away their purpose.

But for Big Data, you need systems that embrace the “distributed model”, meaning scale-out should be embraced as a core feature.

In essence, that is what NoSQL is all about - allowing you to distribute databases over different servers, allowing them to handle gigabytes and even petabytes of data.

As they are designed especially for these kinds of cases, NoSQL databases can handle massive amounts of data whilst ensuring and maintaining low latency and high availability. NoSQL databases are simply more suited to handling Big Data than any kind of RDBMS.

NoSQL databases are also considered "schema free". This essentially means that you can have unlimited different data-models (or schemas) within a database. To be clear, the data will always have a schema (or type, or model), but because the schema can vary for each item, there is no schema management. Whilst schema changes in relational databases can pose a challenge, in NoSQL databases, you can integrate schema changes without downtime.

What is Azure Cosmos DB?

So, we now have a fairly good idea of what defines a system as a NoSQL database. But what is Azure Cosmos DB, and how does it differ from other frameworks and (NoSQL) databases already available?

Azure Cosmos DB, announced at the Microsoft Build 2017 conference, is an evolution of the former Azure Document DB, which was a scalable, low-latency NoSQL document database hosted on Microsoft's Azure platform.

Azure Cosmos DB allows virtually unlimited scale and automatically manages storage with server-side partitioning to uphold performance. As it is hosted on Azure, a Cosmos DB can be globally distributed.

Cosmos DB supports multiple data models, including JSON, BSON, Table, Graph and Columnar, exposing them with multiple APIs - which is probably why the name "Document DB" didn't suffice any more.

Azure Cosmos DB was born!

Let's sum up the core features of Azure Cosmos DB:

- Turnkey global distribution.

- Single-digit millisecond latency.

- Elastic and unlimited scalability.

- Multi-model with wire protocol compatible API endpoints for Cassandra, MongoDB, SQL, Gremlin and Table along with built-in support for Apache Spark and Jupyter notebooks.

- SLAs that guarantee 99.99% availability, as well as performance, latency and consistency.

- Local emulator

- Integration with Azure Functions and Azure Search.

Let's look at these different features, starting with what makes Azure Cosmos DB a truly unique database service: multi-model with API endpoint support.

Multi-model

SQL (Core) API

Azure Cosmos DB SQL API is the primary API, which lives on from the former Document DB. Data is stored in JSON format. The SQL API provides a formal programming model for rich queries over JSON items. The SQL used is essentially a subset, optimized for querying JSON documents.

Azure Cosmos DB API for MongoDB

The MongoDB API provides support for MongoDB data (BSON). This will appeal to existing MongoDB developers, because they can enjoy all the features of Cosmos DB, without changing their code.

Cassandra API

The Cassandra API, using columnar as data model, requires you to define the schema of your data up front. Data is stored physically in a column-oriented fashion, so it's still okay to have sparse columns, and it has good support for aggregations.

Gremlin API

The Gremlin API (Graph traversal language) provides you with a "graph database", which allows you to annotate your data with meaningful relationships. You get the graph traversal language that leverages these annotations allowing you to efficiently query across the many relationships that exist in the database.

Table API

The Table API is an evolution of Azure Table Storage. With this API, each entity consists of a key and a value pair. But the value itself can, in turn, contain a set of key-value pairs.

Spark

The Spark API enables real-time machine learning and AI over globally distributed data-sets by using built-in support for Apache Spark and Jupyter notebooks.

The choice is yours!

The choice of which API (and underlying data model) to use, is crucial. It defines how you will be approaching your data and how you will query it. But, regardless of which API you choose, all the key features of Azure Cosmos DB will be available.

Internally, Cosmos DB will always store your data as "ARS", or Atom Record Sequence. This format gets projected to any supported data model. No matter which API you choose, your data is always stored as key-values; where the values can be simple values or nested key-values (think of JSON and BSON). This concept allows developers to have all the features, regardless of the model and API they choose.

In this article, we will focus on the SQL API, which is still the primary API that lives on from the original Document DB Service.

Azure Cosmos DB Accounts, Databases and Containers

An Azure Cosmos database is, in essence, a unit of management for a set of schema-agnostic containers. They are horizontally partitioned across a set of machines using "Physical partitions" hosted within one or more Azure regions. All these databases are associated with one Azure Cosmos DB Account.

Figure 2: Simplified Azure Cosmos DB top-level overview

To create an Azure Cosmos DB account, you need to enter a unique name; your endpoint is formed by appending “.documents.azure.com” to that name. Within this account, you can create any number of databases. An account is associated with one API. In the future, it might be possible to freely switch between APIs within the account, but at the time of writing this is not yet the case. All the databases within one account will always use the same API.

You can create an Azure Cosmos DB Account on the Azure portal. I'll quickly walk through the creation of an Azure Cosmos DB account, creating a database and a couple of collections which will serve as sample data for the rest of this article.

Figure 3: Create Azure Cosmos DB Account

For this article, we'll be using a collection of volcanoes to demonstrate some features. The document looks like this:

    {
        "VolcanoName": "Acatenango",
        "Country": "Guatemala",
        "Region": "Guatemala",
        "Location": {
            "type": "Point",
            "coordinates": [-90.876, 14.501]
        },
        "Elevation": 3976,
        "Type": "Stratovolcano",
        "Status": "Historical",
        "Last Known Eruption": "Last known eruption in 1964 or later",
        "id": "a6297b2d-d004-8caa-bc42-a349ff046bc4"
    }

For this data model, we'll create a couple of collections, each with different partition keys. We’ll look into that concept in depth later on in the article, but for now, I’ll leave you with this: A partition key is a pre-defined hint Azure Cosmos DB uses to store and locate your documents.

For example, we’ll define three collections with different partition keys:

- By country (/Country)

- By Type (/Type)

- By Name (/VolcanoName)

You can use the portal to create the collections:

Figure 4: Creating Collections and database using Azure portal

All right! We have now set up some data containers with sample data which can be used to explain the core concepts. Let's dive in!

Throughput, horizontal partitioning and global distribution

Throughput

In Azure Cosmos DB, performance of a container (or a database) is configured by defining its throughput.

Throughput defines how many requests can be served within a specific period, and how many concurrent requests can always be served within any given second. It defines how much load your database and/or container needs to be able to handle, at any given moment in time. It is not to be mistaken with latency, which defines how fast a response for a given request is served.

Managing throughput and ensuring predictable performance is configured by assigning Request Units (or RUs). Azure Cosmos DB uses the assigned RUs to ensure that the requested performance can be handled, regardless of how demanding the workload you put on the data in your database, or your collection.

Request Units are a blended measurement of computational cost for any given request. Required CPU calculations, memory allocation, storage, I/O and internal network traffic are all translated into one unit. Simplified, you could say that RUs are the currency used by Azure Cosmos DB.

The Request Unit concept allows you to disregard any concerns about hardware. Azure Cosmos DB, being a database service, handles that for you. You only need to think about the amount of RUs that should be provisioned for a container.

To be clear, a Request Unit is not the same as a Request, as not all requests are considered equal. For example, read requests generally require fewer resources than write requests, which makes write requests more expensive. If your query takes longer to process, you'll use more resources, resulting in a higher RU cost.

For any request, Azure Cosmos DB will tell you exactly how many RUs that request consumed. This allows for predictable performance and costs, as identical requests will always consistently cost the same number of RUs.

In simple terms: RUs allow you to manage and ensure predictable performance and costs for your database and/or data container.
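To make the "currency" idea a bit more tangible, here is a rough Python sketch that adds up the RU cost of a workload for one second. The per-request costs are illustrative assumptions (about 1 RU for a small point read, about 5 RUs for a small write, and the query cost quoted later in this article), and so is the rounding to steps of 100 RU/s with a floor of 400. The real charge of a request is whatever Azure Cosmos DB reports back for it.

```python
import math

# Assumed, illustrative per-request costs in RUs (not measured values).
ASSUMED_COST = {"point_read": 1.0, "write": 5.0, "query": 7.24}


def required_ru_per_second(requests_per_second: dict) -> int:
    """Sum the RU cost of all requests issued in one second."""
    total = sum(ASSUMED_COST[kind] * rate
                for kind, rate in requests_per_second.items())
    # Assumption: throughput is provisioned in steps of 100 RU/s, minimum 400.
    return max(400, math.ceil(total / 100) * 100)


# Hypothetical workload: requests per second for each kind of operation.
workload = {"point_read": 200, "write": 50, "query": 10}
print(required_ru_per_second(workload))
```

With this made-up workload, the writes dominate the total even though there are four times as many reads, which is exactly why a Request Unit is not the same thing as a request.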

If we take the sample volcano data, you can see the same RU cost for inserting or updating each item.

Figure 5: Insert or update an item in a data container

On the other hand, if we read data, the RU cost will be lower. And for every identical read request, the RU cost will always be the same.

Figure 6: Query RU cost

When you create a data container in Cosmos DB, you reserve the number of Request Units per second (RU/s) that needs to be serviced for that container. This is called "provisioning throughput".

Azure Cosmos DB will guarantee that the provisioned throughput can be consumed for the container every second. You will then be billed for the provisioned throughput monthly.

Your bill will reflect the provisions you make, so this is a very important step to take into consideration. By provisioning throughput, you tell Azure Cosmos DB to reserve the configured RU/s, and you will be billed for what you reserve, not for what you consume.

Should the situation arise that the provisioned RU/s are exceeded, further requests are "throttled". This basically means that the throttled request will be refused, and the consuming application will get an error. In the error response, Azure Cosmos DB will inform you how much time to wait before retrying.

So, you could implement a fallback mechanism fairly easily. When requests are throttled, it almost always means that the provisioned RU/s is insufficient to serve the throughput your application requires. So, while it is a good idea to provide a fallback "exceeding RU limit" mechanism, note that you will almost always have to provision more throughput.
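A minimal sketch of such a fallback could look like the following. It is plain Python and does not talk to Azure Cosmos DB: `ThrottledError`, `call_with_retry` and `flaky_read` are hypothetical names made up for this example, and the error simply carries the wait time that the service would communicate in its throttling response. The official SDKs come with their own retry handling for throttled requests, so treat this purely as an illustration of the idea.

```python
import time


class ThrottledError(Exception):
    """Hypothetical stand-in for a throttled ("request rate too large") response."""

    def __init__(self, retry_after_ms: int):
        super().__init__(f"throttled, retry after {retry_after_ms} ms")
        self.retry_after_ms = retry_after_ms


def call_with_retry(operation, max_attempts: int = 5, sleep=time.sleep):
    """Run operation(); when throttled, wait as long as the service asked."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except ThrottledError as err:
            if attempt == max_attempts:
                raise  # give up: the provisioned RU/s is probably too low
            sleep(err.retry_after_ms / 1000)


# Simulated operation: throttled twice, then succeeds.
attempts = {"count": 0}


def flaky_read():
    attempts["count"] += 1
    if attempts["count"] <= 2:
        raise ThrottledError(retry_after_ms=10)
    return {"id": "a6297b2d-d004-8caa-bc42-a349ff046bc4"}


result = call_with_retry(flaky_read)
print(result["id"], "after", attempts["count"], "attempts")
```

Note that the loop gives up after a fixed number of attempts. If that happens regularly, retrying harder is not the answer; provisioning more throughput is.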

Request Units and billing

Provisioning throughput is directly related to how much you will be billed monthly. Because identical requests always result in the same RU cost, you can predict the monthly bill in an easy and accurate way.

When you create an Azure Cosmos DB container with the minimal throughput of 400 RU/s, you'll be billed roughly $24 per month. To get a grasp of how many RU/s you will need to reserve, you can use the Request Unit Calculator. This tool allows you to calculate the required RU/s after setting parameters such as item size, reads per second, writes per second, etc. If you don't know this information when you create your containers, don't worry: throughput can be changed on the fly, without any downtime.

The calculator can be accessed here: https://cosmos.azure.com/capacitycalculator/

Figure 7: Azure Cosmos DB Request Unit Calculator
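For a quick back-of-the-envelope check, the relation between provisioned RU/s and the monthly bill can be sketched in a few lines of Python. The hourly rate per 100 RU/s and the number of hours per month used here are assumptions for illustration only; prices differ per region and change over time, so the official calculator remains the reference.

```python
HOURS_PER_MONTH = 730                   # assumed average month length in hours
ASSUMED_RATE_PER_100_RU_HOUR = 0.008    # assumed USD price, check current pricing


def monthly_cost_usd(provisioned_ru_per_second: int) -> float:
    """Estimate the monthly bill for a given provisioned throughput."""
    units = provisioned_ru_per_second / 100
    return round(units * ASSUMED_RATE_PER_100_RU_HOUR * HOURS_PER_MONTH, 2)


for ru in (400, 1000, 10000):
    print(ru, "RU/s ->", monthly_cost_usd(ru), "USD per month")
```

Under these assumptions, 400 RU/s lands a little above 23 dollars per month, which matches the rough figure mentioned above. Because you are billed for what you reserve, the estimate depends only on the provisioned value, not on actual usage.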

Apart from the billing aspect, you can always use the Azure Portal to view detailed charts and reports on every metric related to your container usage.

Partitioning

Azure Cosmos DB allows you to massively scale out containers, not only in terms of storage, but also in terms of throughput. When you provision throughput, data is automatically partitioned to be able to handle the provisioned RU/s you reserved.

Data is stored in physical partitions, which consist of a set of replicas (or clones), also referred to as replica sets. Each replica set hosts an instance of the Azure Cosmos database engine, making data stored within the partition durable, highly available, and consistent. Simplified, a physical partition can be thought of as a fixed-capacity data bucket, which means that you can't control the size, placement, or number of partitions.

To ensure that Azure Cosmos DB does not need to investigate all partitions when you request data, it requires you to select a partition key.

For each container, you'll need to configure a partition key. Azure Cosmos DB will use that key to group items together. For example, in a container where all items contain a City property, you can use City as the partition key for that container.

Figure 8: Logical partitions within a physical partition

Groups of items that have specific values for City, such as London, Brussels and Washington will form distinct logical partitions.

Partition key values are hashed, and Cosmos DB uses that hash to identify the logical partition the data should be stored in. Items with the same partition key value will never be spread across different logical partitions.

Internally, one or more logical partitions are mapped to a physical partition. This is because, while containers and logical partitions can scale dynamically, physical partitions cannot: with one physical partition per logical partition, you would wind up with far more physical partitions than you actually need.
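The idea of hashing a partition key value and mapping the result onto a physical partition can be sketched as follows. This is a simplified model, not the actual Cosmos DB implementation: the hash function (MD5), the size of the hash space and the function names are all assumptions chosen to keep the example short and deterministic.

```python
import hashlib

HASH_SPACE = 2 ** 32  # assumed size of the hash range, for illustration only


def hash_partition_key(value: str) -> int:
    """Map a partition key value to a position in the hash space."""
    digest = hashlib.md5(value.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big")


def physical_partition_for(value: str, physical_partitions: int) -> int:
    """Each physical partition owns one contiguous slice of the hash space."""
    slice_size = HASH_SPACE // physical_partitions
    return min(hash_partition_key(value) // slice_size, physical_partitions - 1)


for city in ["London", "Brussels", "Washington", "London"]:
    print(city, "->", physical_partition_for(city, physical_partitions=3))
```

Two things are worth noticing: the same City value always ends up in the same place, and several different values can share one physical partition.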

If a physical partition gets near its limits, Cosmos DB automatically spins up a new partition, splitting and moving data across the old and new one. This allows Cosmos DB to handle virtually unlimited storage for your containers.

Any property in your data model can be chosen as the partition key. But only the correct property will result in optimal scaling of your data. The right choice will produce massive scaling, while choosing poorly will impede Azure Cosmos DB's ability to scale your data (resulting in so called hot partitions).

So even though you don't really need to know how your data eventually gets partitioned, understanding it in combination with a clear view of how your data will be accessed, is absolutely vital.

Choosing your partition key

Firstly, and most importantly, queries will be most efficient if they can be scoped to one logical partition.

In addition, transactions and stored procedures are always bound to the scope of a single partition. Together, these make up the first consideration when deciding on a partition key.

Secondly, you want to choose a partition key that will not cause throughput or storage bottlenecks. Generally, you’ll want to choose a key that spreads the workload evenly across all partitions and evenly over time.

Remember, when data is added to a container, it will be stored in a logical partition. Once the physical partition hosting the logical partition grows out of bounds, the logical partition is automatically moved to a new physical partition.

You want a partition key that yields as many distinct values as possible. Ideally you want distinct values in the hundreds or thousands. This allows Azure Cosmos DB to logically store multiple partition keys in one physical partition, without having to move partitions behind the scenes.

Take the following rules into consideration upon deciding partition keys:

- A single logical partition has an upper limit of 10 GB of storage. If you max out the limit of this partition, you'll need to manually reconfigure and migrate data to another container. This is a disaster situation you don’t want!

- To prevent the issue raised above, choose a partition key that has a wide range of values and access patterns, that are evenly spread across logical partitions.

- Choose a partition key that spreads the workload evenly across all partitions and evenly over time.

- The partition key value of a document cannot change. If you need to change the partition key, you'll need to delete the document and insert a new one.

- The partition key of a collection can never be changed once it is set, and defining one is mandatory.

- Each collection can have only one partition key.
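Before committing to a key, it can help to check candidate properties against a sample of your data. The Python sketch below counts the distinct values of each candidate and the share of items that would land in the largest logical partition. The rows are made up for illustration (only the first one mirrors the sample document shown earlier), and `key_stats` is a hypothetical helper, not part of any SDK.

```python
from collections import Counter

# Made-up sample rows; only the first mirrors the document shown earlier.
volcanoes = [
    {"VolcanoName": "Acatenango", "Country": "Guatemala", "Type": "Stratovolcano"},
    {"VolcanoName": "Sample B", "Country": "Guatemala", "Type": "Stratovolcano"},
    {"VolcanoName": "Sample C", "Country": "Russia", "Type": "Stratovolcano"},
    {"VolcanoName": "Sample D", "Country": "Russia", "Type": "Lava Cone"},
    {"VolcanoName": "Sample E", "Country": "Russia", "Type": "Stratovolcano"},
    {"VolcanoName": "Sample F", "Country": "Japan", "Type": "Stratovolcano"},
]


def key_stats(items, key):
    """Return (distinct values, share of items in the largest logical partition)."""
    counts = Counter(item[key] for item in items)
    largest_share = max(counts.values()) / len(items)
    return len(counts), largest_share


for candidate in ("Type", "Country", "VolcanoName"):
    distinct, share = key_stats(volcanoes, candidate)
    print(f"{candidate}: {distinct} distinct values, "
          f"largest partition holds {share:.0%} of the items")
```

A key with few distinct values and one dominant partition is a warning sign for both the storage limit and hot partitions. This only looks at storage distribution; how evenly requests are spread over time is something you still need to judge from your access patterns.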

Let's try a couple of examples to make sense of how we would choose partition keys, how hot partitions work and how granularity in partition keys can have positive or negative effects in the partitioning of your Azure Cosmos DB. Let’s take the sample volcano data again to visualize the possible scenarios.

Partition key “Type”

When I first looked through the volcano data, “type” immediately stood out as a possible partition key. Our partitioned data looks something like this:

Figure 11: Volcanoes by type

To be clear, I’m by no means a volcano expert; the data repo is for sample purposes only. I have no idea if the situation I invented is realistic. It is only for demo purposes.

Okay, now let’s say we are not only collecting meta-data for each volcano, but also collecting data for each eruption, which means we look at the collection from a Big Data perspective. Let’s say that “Lava Cone” volcanoes erupted three times as often this year as in previous years. But as displayed in the image, the “Lava Cone” logical partition is already big. This will result in Cosmos DB moving it to a new physical partition, or eventually, the logical partition will max out.

So “Type”, while seemingly a good partition key, is not such a good choice if you want to collect hour-to-hour data of eruption events. For the meta-data itself, it should do just fine.

The same goes for choosing “Country” as a partition key. If for one reason or another, Russia gets more eruptions than other countries, its size will grow. If the eruption data gets fed into the system in real-time, you can also get a so-called hot partition, with Russia taking up all the throughput in the container - leaving none left for the other countries.

If we were collecting data in real-time on a massive scale, the only good partition key in this case is “Name”. This will yield the most distinct values, and should distribute the data evenly amongst the partitions.

Microsoft created a very interesting and comprehensive case study on how to model and partition data on Azure Cosmos DB using a real-world example. You can find the documentation here: https://docs.microsoft.com/en-us/azure/cosmos-db/partition-data.

Cross partition Queries

You will come across situations where querying by partition key will not suffice. Azure Cosmos DB can span queries across multiple partitions, which basically means that it will need to look at all partitions to satisfy the query requirements. I hope it’s clear at this point that you don't want this to happen too frequently, as it will result in high RU costs.

When cross partition queries are needed, Cosmos DB will automatically “fan out the query”, i.e. it will visit all the partitions in parallel, gather the results, and then return all results in a single response.

This is more costly than running queries against single partitions, which is why these kinds of queries should be limited and avoided as much as possible. Azure Cosmos DB requires you to explicitly enable this behaviour by setting it in code or via the portal. Writing a query without the partition key in the where clause, and without explicitly setting the "EnableCrossPartitionQuery" property, will fail.
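The difference between a query scoped to one partition and a fan-out query can be illustrated with a small in-memory model. This Python sketch does not use the Cosmos DB SDK: the `query` function, its `enable_cross_partition` flag and the sample rows are assumptions made up for this example, loosely mimicking the behaviour described above.

```python
from collections import defaultdict

# Made-up items; "Country" plays the role of the partition key.
items = [
    {"VolcanoName": "Acatenango", "Country": "Guatemala", "Type": "Stratovolcano"},
    {"VolcanoName": "Sample B", "Country": "Russia", "Type": "Lava Cone"},
    {"VolcanoName": "Sample C", "Country": "Japan", "Type": "Stratovolcano"},
]

partitions = defaultdict(list)
for item in items:
    partitions[item["Country"]].append(item)


def query(predicate, partition_key=None, enable_cross_partition=False):
    """Return (matching items, number of partitions visited)."""
    if partition_key is not None:
        targets = [partition_key]        # scoped to a single partition
    elif enable_cross_partition:
        targets = list(partitions)       # fan out to every partition
    else:
        raise ValueError("cross partition query is not enabled")
    hits = [i for key in targets for i in partitions.get(key, []) if predicate(i)]
    return hits, len(targets)


print(query(lambda i: True, partition_key="Guatemala"))
print(query(lambda i: i["Type"] == "Stratovolcano", enable_cross_partition=True))
```

The second call has to touch every partition to find its matches. In the real service, that extra work is what shows up as a higher RU charge.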

Back to the sample volcano data, we see the RU cost rise when we trigger a cross partition query. As we saw earlier, the RU cost for querying a collection with partition key “Country” was 7.24 RUs. If we query that collection by type, the RU cost is 10.58 RUs. Now, this might not be a significant rise, but remember, the sample data is small, and the query is executed only once. In a distributed application model, this could be quite catastrophic!

Figure 12: Cross partition query RU cost.

Indexing

Azure Cosmos DB allows you to store data without having to worry about schema or index management. By default, Azure Cosmos DB will automatically index every property for all items in your container.

This means that you don’t really need to worry about indexes, but let’s take a quick look at the indexing modes Azure Cosmos DB provides:

- Consistent: This is the default setting. The indexes are updated synchronously as you create, update or delete items.

- Lazy: Updates to the index are done at a much lower priority level, when the engine is not doing any other work. This can result in inconsistent or incomplete query results, so using this indexing mode is recommended against.

- None: Indexing is disabled for the container. This is especially useful if you need to do big bulk operations, as removing the indexing policy will improve performance drastically. After the bulk operations are complete, the index mode can be set back to consistent, and you can check the IndexTransformationProgress property on the container (SDK) to track the progress.

You can include and exclude property paths, and you can configure four kinds of indexes for data. Depending on how you are going to query your data, you might want to change the index on your properties:

- Hash index: Used for equality indexes.

- Range index: This index is useful if you have queries where you use range operations (< > !=), ORDER BY and JOIN.

- Spatial Index: This index is useful if you have GeoJSON data in your documents. It supports Points, LineStrings, Polygons, and MultiPolygons.

- Composite Index: This index is used for filtering on multiple properties.

As indexing in Azure Cosmos DB is performed automatically by default, I won’t include more information here. Knowing how to leverage these different kinds of indexes (and thus deviating from the default) in different scenarios can result in lower RU costs and better performance. You can find more info in the official Microsoft documentation, specific to a range of use cases.
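To give an impression of what deviating from the default looks like, here is a sketch of an indexing policy for the volcano container, built as a Python dictionary and printed as JSON. The property names follow the JSON shape of a Cosmos DB indexing policy as I understand it, and the chosen paths (excluding Status, a spatial index on Location, a composite index on Country and Elevation) are assumptions picked for this example rather than recommendations.

```python
import json

# Sketch of an indexing policy for the volcano container (paths are assumptions).
indexing_policy = {
    "indexingMode": "consistent",                 # the default mode
    "automatic": True,
    "includedPaths": [{"path": "/*"}],            # index everything ...
    "excludedPaths": [{"path": "/Status/?"}],     # ... except this property
    "spatialIndexes": [{"path": "/Location/*", "types": ["Point"]}],
    "compositeIndexes": [
        [
            {"path": "/Country", "order": "ascending"},
            {"path": "/Elevation", "order": "descending"},
        ]
    ],
}

print(json.dumps(indexing_policy, indent=2))
```

Excluding paths you never filter on tends to make writes cheaper, while the composite index targets queries that filter or sort on both Country and Elevation.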

Global distribution

As said in the intro, Azure Cosmos DB can be distributed globally and can manage data on a planet scale. There are a couple of reasons why you would use Geo-replication for your Cosmos databases:

Latency and performance: If you stay within the same Azure region, the RU/s reserved on a container are guaranteed by the replicas within that region. The more replicas you have, the more available your data will become. But Azure Cosmos DB is built for global apps, allowing your replicas to be hosted across regions. This means the data can be allocated closer to consuming applications, resulting in lower latency and high availability.

Azure Cosmos DB has a multi-master replication protocol, meaning that every region supports both writes and reads. The consuming application is aware of the nearest region and can send requests to that region. This region is identified without any configuration changes. As you add more regions, your application does not need to be paused or stopped. The traffic managers and load balancers built into the replica set, take care of it for you!

Disaster recovery: By Geo-replicating your data, you ensure the availability of your data, even in the event of a major failure or natural disaster. Should one region become unavailable, other regions automatically take over to handle requests, for both write and read operations.

Turnkey Global Distribution

In your Azure Cosmos DB Account, you can add or remove regions with the click of a mouse! After you select multiple regions, you can choose to do a manual fail over, or select automatic fail over priorities in case of unavailability. So, if a region should become unavailable for whatever reason, you control which region behaves as a primary replacement for both reads and writes.

You can Geo-replicate your data in every Azure Region that is currently available:

Figure 13: Azure regions currently available

https://azure.microsoft.com/en-us/global-infrastructure/regions/

In theory, global distribution will not affect the throughput of your application. The same RUs are used for requests in every region, i.e. a query in one region has the same RU cost as the same query in another region. Performance is measured in terms of latency, so the time data spends on the wire will increase if the consuming application is not hosted in the same Azure region as the data. So even though the costs will be the same, latency is a definite factor to take into consideration when Geo-replicating your data.

While there are no additional costs for global distribution itself, the standard Cosmos DB pricing gets replicated. This means that your provisioned RU/s for one container will also be provisioned for its Geo-replicated instances. So, if you provision 400 RU/s for one container in one region, you will reserve 1200 RU/s in total when you replicate that container to two other regions.

Multiple Region Write accounts will also require extra RUs. These are used for operations such as managing write conflicts. These extra RUs are billed at the average cost of the provisioned RUs across your account's regions. This means that if you have three regions configured at 400 RU/s (billed at around $24 per region, roughly $70 in total), you'll be billed an additional $24 for the extra write operations.

This can all be simulated fairly simply by consulting the Cosmos DB Pricing Calculator: https://azure.microsoft.com/en-us/pricing/calculator/?service=cosmos-db.
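The arithmetic described above can also be written down as a small Python sketch. It reuses the rough monthly figure for 400 RU/s quoted in this article as an assumption, and models the multi-region write surcharge as one extra "average region", as described above. Real prices vary, so use the pricing calculator for actual numbers.

```python
ASSUMED_MONTHLY_COST_PER_400_RU = 24.0  # rough USD figure quoted in this article


def multi_region_estimate(ru_per_second: int, regions: int,
                          multi_region_writes: bool):
    """Return (total reserved RU/s, rough monthly cost in USD)."""
    per_region_cost = ru_per_second / 400 * ASSUMED_MONTHLY_COST_PER_400_RU
    total_ru = ru_per_second * regions
    cost = per_region_cost * regions
    if multi_region_writes:
        # Extra RUs for conflict handling, billed at the average regional cost.
        cost += per_region_cost
    return total_ru, cost


print(multi_region_estimate(400, regions=3, multi_region_writes=False))
print(multi_region_estimate(400, regions=3, multi_region_writes=True))
```

Both calls reserve 1200 RU/s in total. The second one simply adds the cost of one more region on top for the multi-region writes.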

Replication and Consistency

As Azure Cosmos DB replicates your data to different instances across multiple Azure regions, you need ways to get consistent reads across all the replicas. By applying consistency levels, you get control over possible read version conflicts scattered across the different regions.

There are five consistency levels that you can configure for your Azure Cosmos DB Account:

Figure 14: Consistency levels

These levels, ranging from strong to eventual, allow you to take complete control of the consistency tradeoffs for your data containers. Generally, the stronger your consistency, the lower your performance in terms of latency and availability. Weaker consistency results in higher performance but has possible dirty reads as a tradeoff.

Strong consistency ensures that you never have a dirty read, but this comes at a huge cost in latency. In strong consistency Azure Cosmos DB will enforce all reads to be blocked until all the replicas are updated.

Eventual consistency, on the other side of the spectrum, will offer no guarantee whatsoever that your data is consistent with the other replicas. So you can never know whether the data you are reading is the latest version or not. But this allows Cosmos DB to handle all reads without waiting for the latest writes, so queries can be served much faster.

In practice, you only need to take consistency into consideration when you start Geo-replicating your Azure Cosmos databases. It's almost impossible to experience a dirty read if your replicas are hosted within one Azure Region. Those replicas are physically located close to each other, resulting in data transfer almost always within 1ms. So even with the strong consistency level configured, the delay should never be problematic for the consuming application.

The problem arises when replicas are distributed globally. It can take hundreds of milliseconds to move your data across continents. It is here that the chances of dirty reads increase sharply, and where consistency levels come into play.

Let's look at the different levels of consistency:

- Strong: No dirty reads, Cosmos DB waits until all replicas are updated before responding to read requests.

- Bounded Staleness: Dirty reads are possible but are bounded by time and updates.

This means that you can configure the threshold for dirty reads, only allowing them if the data isn't out of date. The reads might be behind writes by at most “X” versions (i.e., “updates”) of an item or by “Y” time interval. If the data is too stale, Cosmos DB will fall back to strong consistency.

- Session Consistency (default): No dirty reads for writers, but possible for other consuming applications.

- All writers include a unique session key in their requests. Cosmos DB uses this session key to ensure strong consistency for the writer, meaning a writer will always read the same data as they wrote. Other readers may still experience dirty reads.

- When you use the .NET SDK, it will automatically include a session key, making it by default strongly consistent within the session that you use in your code. So within the lifetime of a document client, you're guaranteed strong consistency.

- Consistent prefix: Dirty reads are possible but can never be out-of-order across your replicas.

- So if the same data gets updated six times, you might get version 4 of the data, but only if that version of the data has been replicated to all your replicas. Once version 4 has been replicated, you'll never get a version before that (1-3), as this level ensures that you get updates in order.

- Eventual consistency: Dirty reads possible, no guaranteed order. You can get inconsistent results in a random fashion, until eventually all the replicas get updated. You simply get data from a replica, without any regards of other versions in other replicas.

When Bounded Staleness and Session don't apply strong consistency, they fall back to Consistent Prefix, not Eventual consistency. So even in those cases, reads can never be out-of-order.
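The difference between a session read and an eventual read can be illustrated with a toy model in Python. This is not how the service is implemented: `Replica`, `session_read` and `eventual_read` are hypothetical names, versions are plain integers, and the session key is modelled as the version number of the writer's last write.

```python
class Replica:
    """A replica holding the value as of some version (hypothetical model)."""

    def __init__(self, version: int, value: str):
        self.version = version
        self.value = value


def session_read(replicas, session_token: int):
    """Read from the first replica that has caught up to the writer's token."""
    for replica in replicas:
        if replica.version >= session_token:
            return replica.value
    raise RuntimeError("no replica has caught up with this session yet")


def eventual_read(replicas):
    """Read from whichever replica answers first; it may be stale."""
    return replicas[0].value


# The writer just wrote version 3; the first replica is still on version 2.
replicas = [Replica(2, "old elevation"), Replica(3, "new elevation")]
print(session_read(replicas, session_token=3))   # the writer sees its own write
print(eventual_read(replicas))                   # another reader may not
```

The writer, who carries the session key, skips the lagging replica and reads its own write. A reader without that key takes whatever the first replica has.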

You can set the preferred consistency level for the entire Cosmos DB Account i.e. every operation for all databases will use that default consistency strategy. This default can be changed at any time.

It is however possible to override the default consistency at request level, but it is only possible to weaken the default. So, if the default is Session, you can only choose between the Consistent Prefix and Eventual consistency levels. The level can be selected when you create the connection in the .NET SDK.
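The "weaken only" rule is easy to express in code. The following Python sketch orders the five levels from strongest to weakest and returns the levels a request may use for a given account default. The level names are written as identifiers chosen for this example, and `allowed_overrides` is a hypothetical helper.

```python
# Ordered from strongest to weakest, as listed in this article.
LEVELS = ["Strong", "BoundedStaleness", "Session", "ConsistentPrefix", "Eventual"]


def allowed_overrides(account_default: str) -> list:
    """A request may keep the default or weaken it, never strengthen it."""
    return LEVELS[LEVELS.index(account_default):]


print(allowed_overrides("Session"))
print(allowed_overrides("Strong"))
```

With Session as the default, only Consistent Prefix and Eventual remain as weaker alternatives, while an account that defaults to Strong can use any level per request.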

Conclusion

Azure Cosmos DB is a rich, powerful and amazing database service that can be used in a wide variety of situations and use cases. With this article, I hope I was able to give you a simplified and easy-to-grasp idea of its features. Especially in distributed applications and in handling Big Data, Azure Cosmos DB stands out from the competition. I hope you enjoyed the read!

This article has been editorially reviewed by Suprotim Agarwal.

My name is Tim Sommer. I live in the beautiful city of Antwerp, Belgium. I have been passionate about computers and programming for as long as I can remember. I'm a speaker, teacher and entrepreneur. I'm a Windows Insider MVP. But most of all, I'm a developer, an architect, a technical specialist and a coach, with 8+ years of professional experience in the .NET framework.